Exploring Defensive Challenges with Artificial Intelligence: From Traditional to Generative

Recently, generative artificial intelligence (GenAI) has emerged as a game-changer in cybersecurity, significantly enhancing traditional security operations. Its applications range from helping analysts understand complex topics during incidents to deciphering intricate scripts and summarizing findings. With appropriate fine-tuning, GenAI models can even suggest investigative steps. This advancement is particularly impressive because it helps even junior analysts handle complex investigations.

While the progress in GenAI is exciting, we should not forget that traditional artificial intelligence (TAI) is still key and still drives core detection capabilities of many enterprises. This does not mean GenAI is less important. Instead, it shows why it is essential to understand both. Knowing when and how to utilize each, and recognizing how they complement each other, is crucial for the effective application of AI in cybersecurity.

In this blog post, I'll explore various artificial intelligence (AI) methods and their evolving roles in cybersecurity. A key aspect of this exploration is understanding how these AI methods integrate into the incident response life cycle, an essential framework in cybersecurity. This serves as a foundation for my upcoming series, where I'll delve deeper into different AI algorithms.

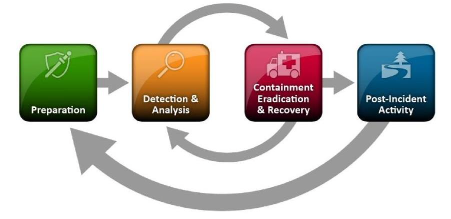

The Incident Response Life Cycle

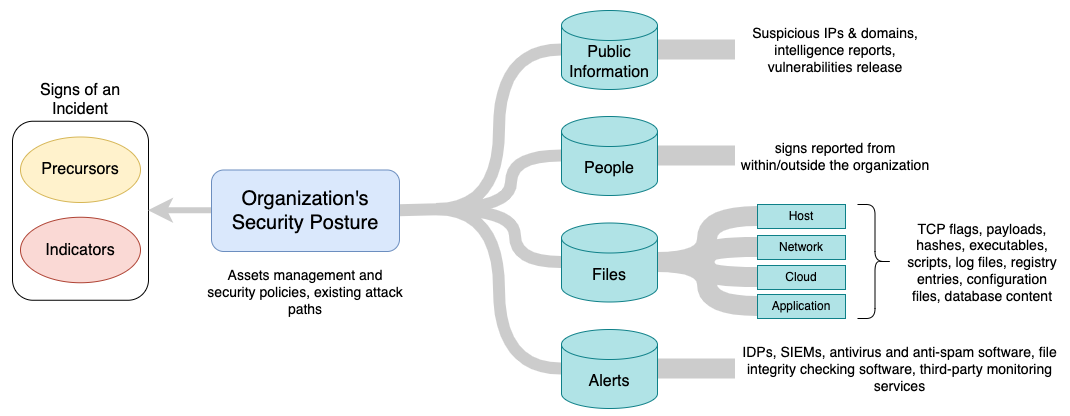

To assist organizations in establishing incident response capabilities and handling incidents effectively, the National Institute of Standards and Technology (NIST) introduced a structured incident response process, outlined in Special Publication 800-61 Revision 2 (NIST SP 800-61r2), that includes four main stages. Among all the activities recommended by the framework, detecting, assessing, and confirming potential incidents pose a great challenge for many organizations. Some of the reasons are the multiple sources of alerts, the high volume of potential signs of incidents, and the technical expertise needed for incident data analysis (Cichonski et al., 2012). These detection and analysis activities are the focus of this blog post.

Incidents typically exhibit signs that fall into two categories: precursors and indicators. According to Cichonski et al. (2012), a precursor is a sign that an incident may occur in the future; an indicator is a sign that an incident may have occurred or may be occurring now. These signs are often discernible through various sources of data such as log files, threat intelligence, alerts produced by security controls, and an organization's security posture.

Detecting and Analyzing Incident Signs... an AI Approach

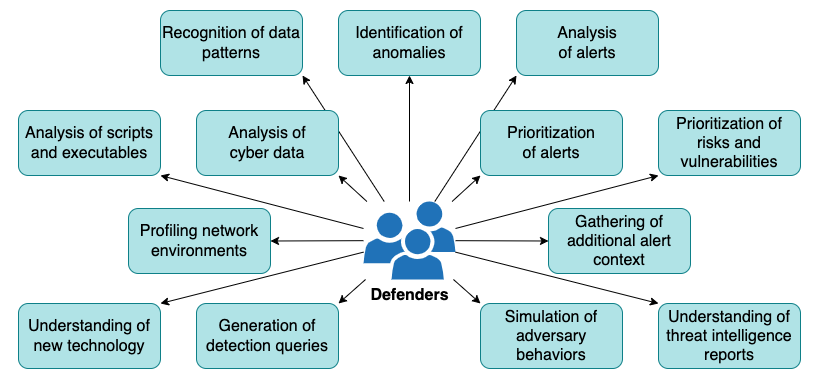

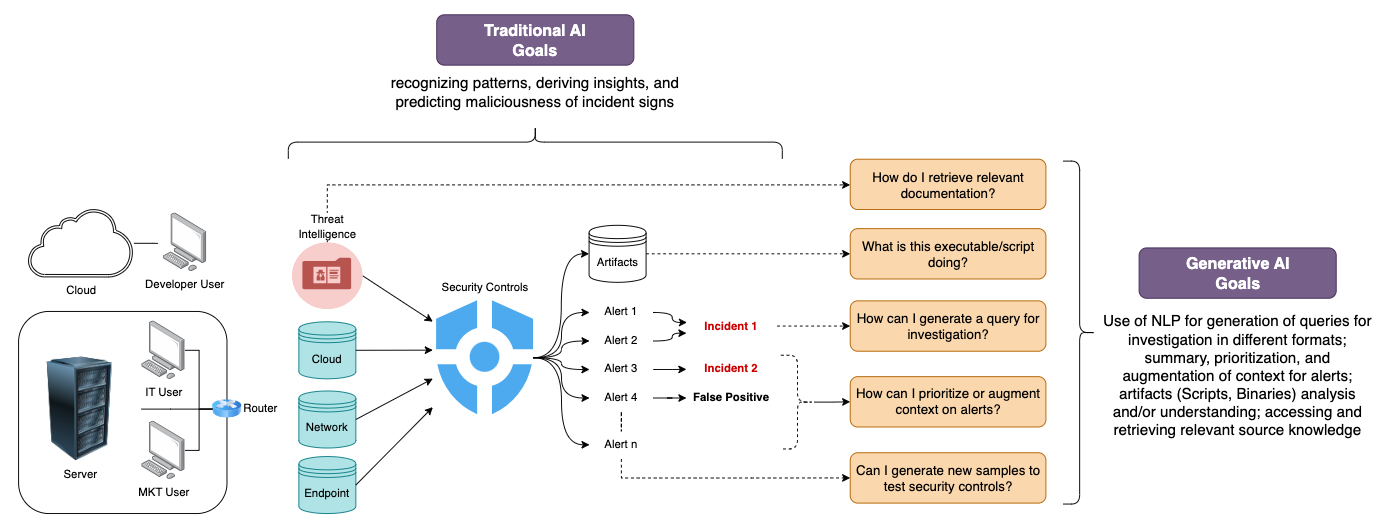

Detection and analysis of incident signs involve a variety of activities such as the ones described in figure 3 below. Usually, an organization hires professionals with the right skills to perform them. However, AI techniques may also help complete these activities effectively.

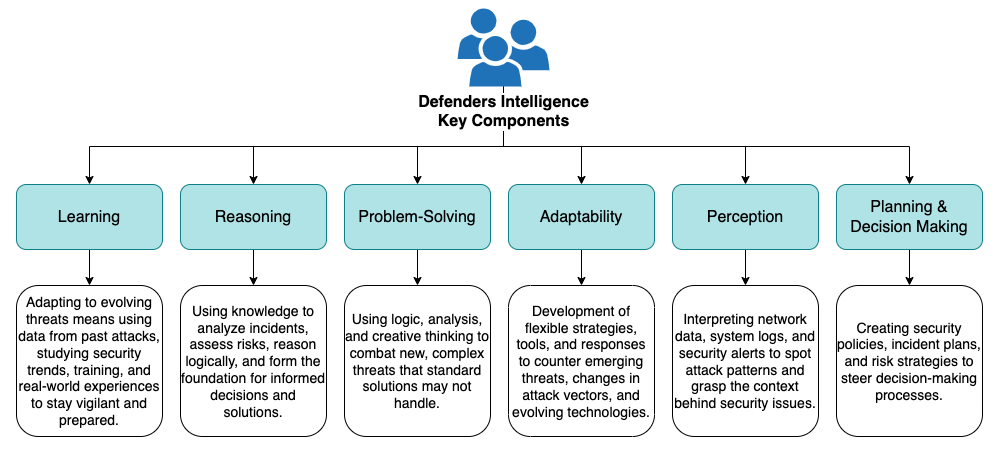

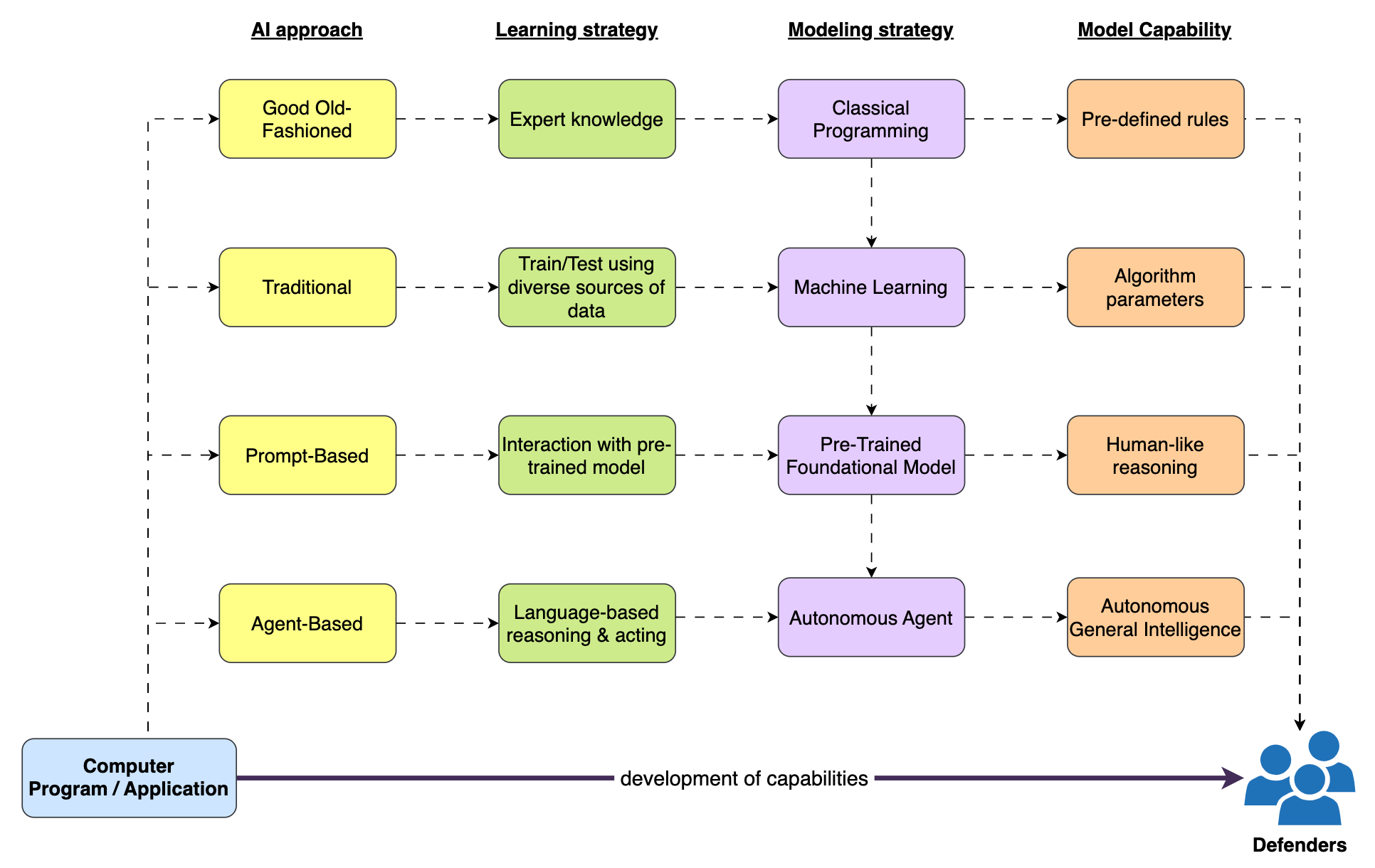

An AI approach refers to computer programs or applications that acquire capabilities to emulate defensive professionals' intelligence in order to support threat detection, prevention, and response capabilities.

Acquiring Defender Intelligence Capabilities

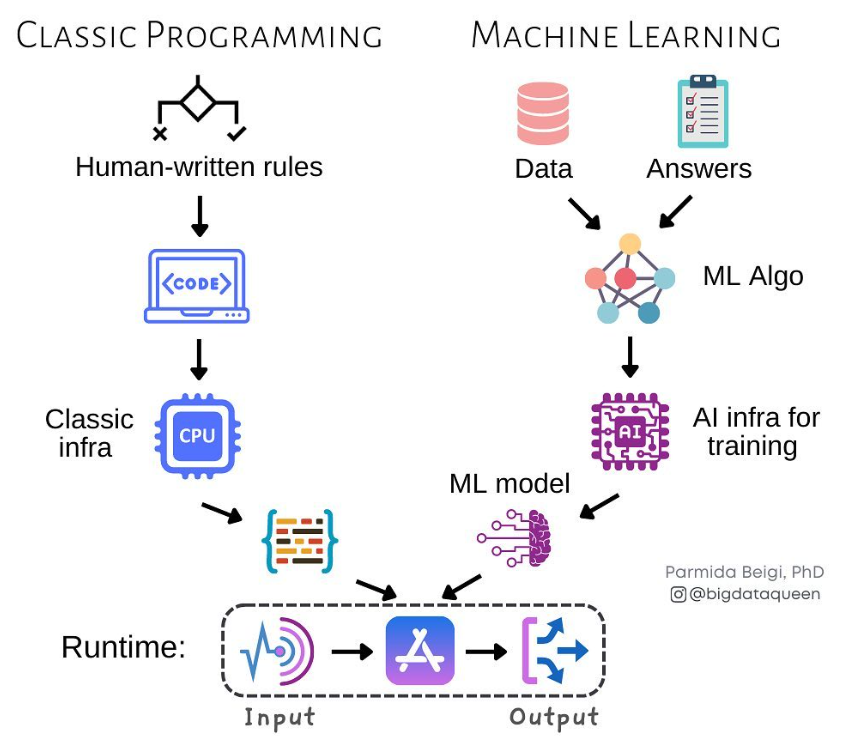

Over the years, AI advancements have enabled machines to perform complex tasks, mirroring the capabilities of a human defender. This evolution began with basic rule-based methods, then progressed to deriving rules from data. The major breakthrough in AI came from a combination of factors: improved algorithms, enhanced computing power, access to vast amounts of data, and the rise of practical applications. Among these, the development of better computing capabilities and the introduction of neural networks were particularly transformative. These technologies enabled machines to interpret complex data patterns and make autonomous decisions.

Good old-fashioned AI (GOFAI)

When using this approach, defenders' expert knowledge is represented in pre-defined rules that allow an organization to define a baseline and search for specific patterns and characteristics in the data. Good examples of this approach are YARA and Sigma rules, intrusion detection systems (IDS), and access control systems.
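To make the rule-based idea concrete, here is a minimal sketch in Python. The `Rule` class, the two patterns, and the sample events are all hypothetical and are not real YARA or Sigma syntax; they only illustrate how expert knowledge becomes a fixed pattern that is searched for in the data.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A hypothetical, simplified detection rule (not real Sigma or YARA syntax)."""
    name: str
    field: str    # which event field to inspect
    pattern: str  # regular expression the field must match


# Expert knowledge captured as fixed patterns (illustrative values only).
RULES = [
    Rule("encoded_powershell", "command_line", r"(?i)powershell.*\s-enc(odedcommand)?\s"),
    Rule("certutil_download", "command_line", r"(?i)certutil.*-urlcache"),
]


def match_rules(event: dict, rules=RULES) -> list[str]:
    """Return the names of all rules whose pattern is found in the event."""
    hits = []
    for rule in rules:
        value = str(event.get(rule.field, ""))
        if re.search(rule.pattern, value):
            hits.append(rule.name)
    return hits


# Made-up process creation events.
events = [
    {"host": "ws01", "command_line": "powershell.exe -NoP -enc SQBFAFgA"},
    {"host": "ws02", "command_line": "notepad.exe report.txt"},
]
for event in events:
    print(event["host"], match_rules(event))
```

A rule like this only fires on the exact pattern it encodes. In this sketch, a command line that uses the shorter `-e` switch instead of `-enc` would not match until someone updates the rule.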

Traditional AI

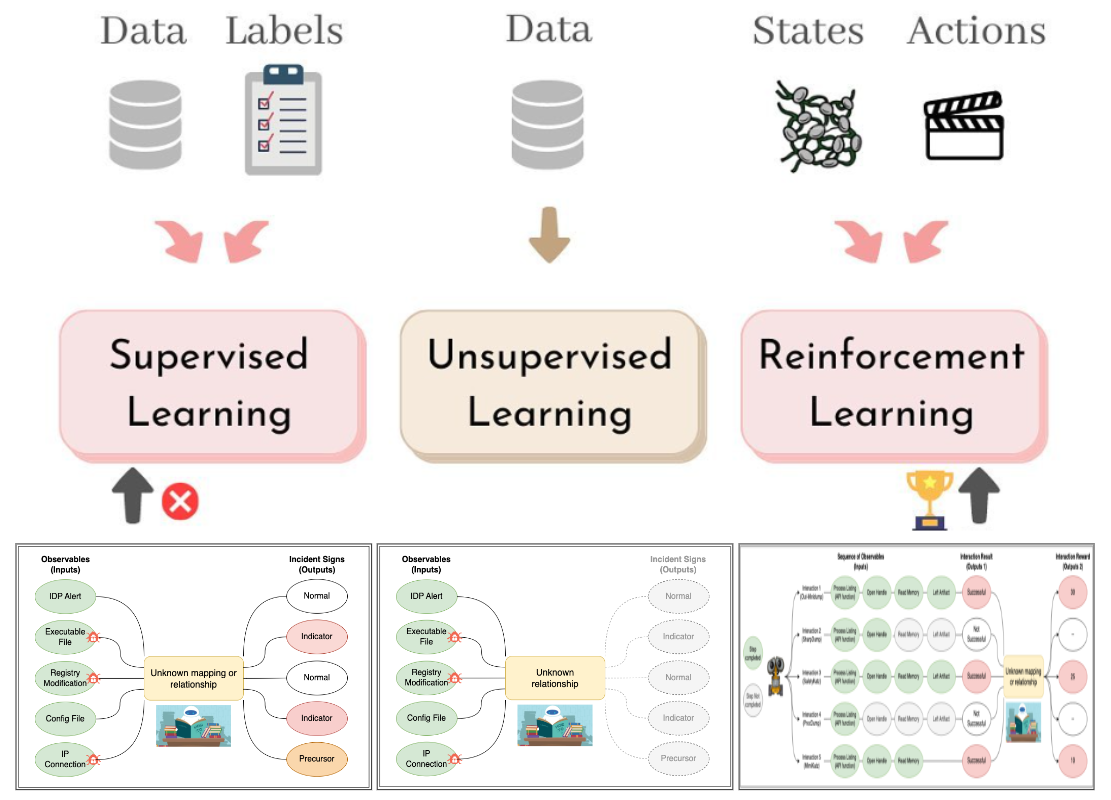

One of the limitations of working with rule-based AI applications is that when a threat actor introduces a new tactic, technique, or procedure (TTP), or a variation of an existing one, we often need to update our rules to keep up. That's where traditional AI, or machine learning, comes in. It allows computers to learn from cybersecurity data more flexibly, understanding complex patterns and making decisions on their own.

Machine learning algorithms continually improve with training. For instance, with labeled software samples, machines can learn to identify malicious content in a supervised way. Without labels, they use unsupervised learning to identify unusual behaviors or clusters of activity that may indicate a threat.
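The sketch below contrasts the two ideas using only the Python standard library. The supervised part is a nearest-centroid classifier, and the unsupervised part is a z-score outlier check used as a simple statistical stand-in for anomaly detection. The feature names, sample values, and threshold are assumptions chosen for illustration, not real measurements.

```python
from statistics import mean, stdev

# --- Supervised: labeled samples train a nearest-centroid classifier ---
# Hypothetical features per software sample: (byte entropy, imported API count).
labeled = [
    ((7.8, 12.0), "malicious"),
    ((7.5, 8.0), "malicious"),
    ((4.9, 120.0), "benign"),
    ((5.2, 95.0), "benign"),
]


def fit_centroids(samples):
    """Average the feature vectors of each label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest (squared Euclidean distance).
    Features are left unscaled here to keep the sketch short."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(centroids[label], features))


# --- Unsupervised: no labels, flag values far from the rest ---
logons_per_hour = [4, 5, 3, 6, 5, 4, 5, 48, 4, 5]  # made-up counts


def zscore_outliers(values, threshold=2.0):
    """Return values more than `threshold` sample standard deviations from the mean."""
    mu, sigma = mean(values), stdev(values)
    return [v for v in values if abs(v - mu) / sigma > threshold]


centroids = fit_centroids(labeled)
print(predict(centroids, (7.9, 15.0)))
print(zscore_outliers(logons_per_hour))
```

In the first half the labels do the teaching. In the second half nothing is labeled, and the unusual hour stands out only because it differs from the rest of the data.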

Reinforcement learning presents another valuable method. Unlike traditional data-driven learning, it involves a computer program interacting with a network, whether real or virtual, to complete specific tasks. The program gets feedback as rewards, which helps it learn the most effective techniques and adapt to find the best strategies in a given environment.
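As a rough illustration of learning from rewards, the following sketch runs tabular Q-learning on a toy environment. The environment is an assumption made for this example: a chain of five states where the program starts at state 0 and is rewarded only when it reaches state 4. It is not a model of a real network, and the hyperparameters are arbitrary.

```python
import random

N_STATES = 5      # states 0..4; state 4 is the goal (terminal)
ACTIONS = (0, 1)  # 0 = step left, 1 = step right


def step(state, action):
    """Toy environment: move along a chain; reward 1.0 only on reaching the goal."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, max_steps=1000, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration and random tie-breaking."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[state])          # exploit
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward + discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q_table = train()
# Greedy policy for each non-terminal state.
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

No one tells the program that moving right is correct. It discovers that strategy because the reward feedback gradually raises the estimated value of the actions that lead to the goal.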

Traditional AI: Natural Language Processing

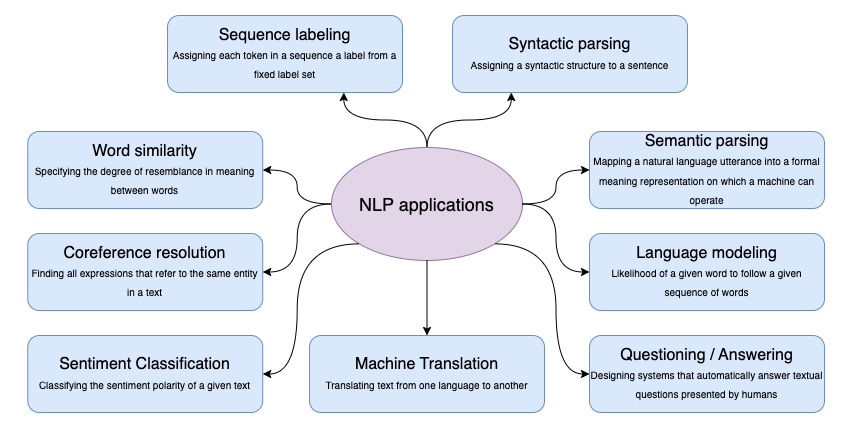

Most of the time, when training and testing machine learning models, we prefer input data organized as a table or data frame where every column describes a feature or variable and every row represents an observation collected from our network environment. However, this is not the only format in which data may be collected. Understanding, interpreting, generating, and responding to human language (text, voice, image), for example, is also an interesting research topic for cyber defense professionals.

By using natural language processing (NLP) techniques, a defender can, for example, identify suspicious and malicious communications such as social media messages and emails, translate and summarize threat intelligence reports, group or cluster documents with similar content to prioritize their processing, and be assisted by chatbots that can process and summarize multiple and diverse sources of threat intelligence.
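One of the simplest ways to group documents by content is to turn each one into a bag of words and compare the word counts with cosine similarity. The sketch below assumes three made-up report snippets. Real pipelines typically add steps such as stop-word removal, weighting, or embeddings.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Made-up snippets standing in for threat intelligence reports.
reports = [
    "phishing email delivers macro document to finance users",
    "finance users received phishing email with macro attachment",
    "ransomware encrypts file shares after lateral movement",
]
vectors = [bag_of_words(r) for r in reports]

for i in range(len(reports)):
    for j in range(i + 1, len(reports)):
        print(i, j, round(cosine(vectors[i], vectors[j]), 3))
```

The two phishing snippets share most of their vocabulary and score high, while the ransomware snippet shares none and scores zero. A clustering step could use these scores to route similar reports together.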

Prompt-based AI

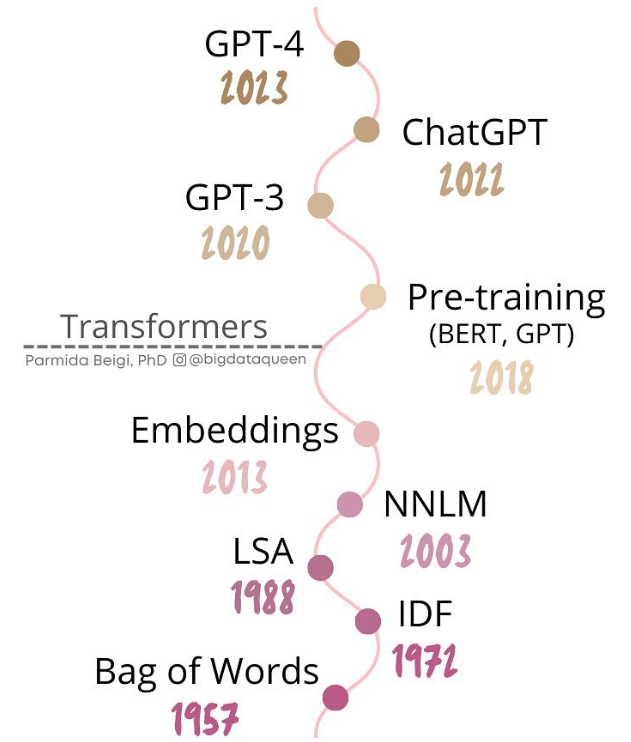

A subfield of NLP that is getting a lot of interest within the cybersecurity field is language modeling, where a probability value is assigned to word sequences to find the most likely next element (generative) or the most likely categorization or answer (discriminative). From the beginning, one of the limitations of NLP techniques was the processing of large corpora. This changed with the introduction of transformers and the concept of attention, which allowed faster and more efficient model training (Vaswani et al., 2017).
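The idea of assigning probabilities to word sequences can be sketched with a bigram model, which estimates the probability of the next word from counts of adjacent word pairs. This is a deliberately tiny, pre-transformer technique, and the four-line corpus is made up for illustration.

```python
from collections import Counter, defaultdict

# Made-up corpus of short log-like phrases.
corpus = [
    "user failed logon",
    "user failed logon",
    "user successful logon",
    "service failed start",
]


def train_bigrams(sentences):
    """Count how often each word follows another, with start/end markers."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Estimate P(next | prev) as relative frequencies; empty dict if prev is unseen."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: count / total for word, count in followers.items()}


def most_likely_next(counts, prev):
    """Generative use: pick the highest-probability next word, or None if unseen."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None


counts = train_bigrams(corpus)
print(next_word_probs(counts, "user"))
print(most_likely_next(counts, "failed"))
```

Large language models replace these raw counts with learned parameters and a much longer context, but the output has the same shape: a probability distribution over what comes next.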

Attention-based models enabled the training of large-scale language models on vast amounts of data to impart general language understanding and knowledge. These models are known as large language models (LLMs). Though they are not the only way to interact with LLMs, prompts are the most prevalent method for cyber defense professionals because of their flexibility and simplicity. This is known as prompt-based AI.

By interacting with LLMs through prompts, a defender can get assistance with responding to an incident, analyzing vast amounts of threat intelligence data, generating incident reports, analyzing malware content, generating or translating detection queries, and developing security policies and procedures.
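A prompt is ultimately just text, so a common pattern is to build it from a template plus the data under investigation. The sketch below assumes a hypothetical template and alert. It stops at producing the prompt string and does not call any particular LLM provider, since that part depends on the service in use.

```python
import json

# Hypothetical template; the wording is an assumption, not a recommended standard.
PROMPT_TEMPLATE = """You are assisting a security analyst during an incident.
Task: {task}
Answer in at most {max_sentences} sentences and state any uncertainty.

Alert data (JSON):
{alert_json}
"""


def build_prompt(task: str, alert: dict, max_sentences: int = 3) -> str:
    """Fill the template with the analyst's task and the alert serialized as JSON."""
    return PROMPT_TEMPLATE.format(
        task=task,
        max_sentences=max_sentences,
        alert_json=json.dumps(alert, indent=2, sort_keys=True),
    )


# Made-up alert for illustration.
alert = {
    "host": "ws01",
    "rule": "encoded_powershell",
    "command_line": "powershell.exe -NoP -enc SQBFAFgA",
}
prompt = build_prompt("Summarize what this alert suggests and propose one next step.", alert)
print(prompt)
```

Keeping the task, the constraints, and the data in separate parts of the template makes it easier to reuse the same structure for report generation, query translation, or other tasks.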

Agent-based AI

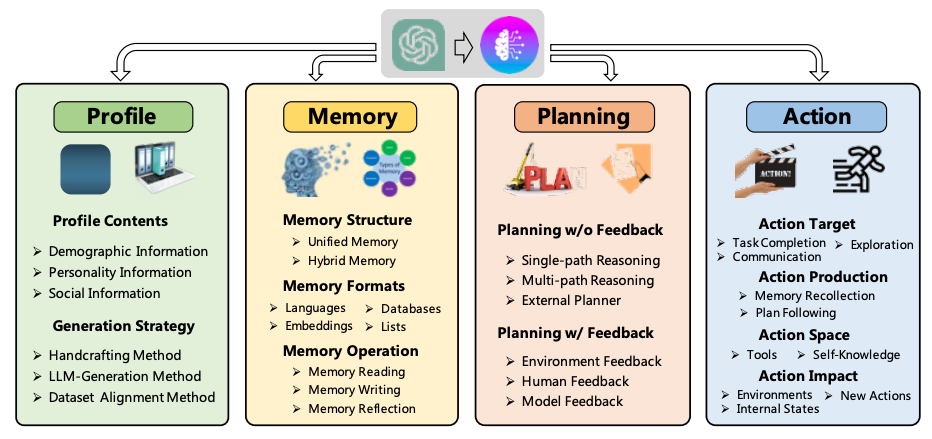

While the initial pre-training of an LLM establishes a solid foundation for defenders to interact with it and ask questions, its capabilities often extend beyond generating content for users. An LLM can also reason over tasks and help defenders logically work through situations using natural language. Even though this sounds great, an LLM needs to do more than reason; it needs to act. This is where agents step in. Agents help LLMs connect with the outside world to understand the core intent behind a defender's prompt, enabling the selection and execution of appropriate actions (Shah, 2023). This is known as agent-based AI. It’s about building smart systems that boost the thinking power of LLMs, enabling them to make decisions and act independently in different scenarios (Wang et al., 2023).

Figure 10 describes the basic architecture of an autonomous agent. The profiling module aims to determine the function of the agent. Meanwhile, the memory and planning modules immerse the agent in a changing environment, allowing it to remember past actions and strategize for future ones. The action module then translates the agent’s choices into distinct outcomes (Wang et al., 2023).
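The four modules can be sketched as a small Python class. Everything here is a simplification made for illustration: the planner is a fixed stub where a real agent would prompt an LLM, and the two tools read from hypothetical in-memory dictionaries rather than real security products.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical data sources standing in for real security tools.
INVENTORY = {"ws01": "finance workstation, owner: jdoe"}
ALERT_COUNTS = {"ws01": 3}


def lookup_host(host: str) -> str:
    return INVENTORY.get(host, "unknown host")


def recent_alerts(host: str) -> str:
    return f"{ALERT_COUNTS.get(host, 0)} alerts in the last 24h"


def stub_planner(profile: str, goal: str, memory: list[str]) -> list[tuple[str, str]]:
    """Planning module. A real agent would prompt an LLM with the profile, goal,
    and memory; this stub returns a fixed plan for the last word of the goal."""
    host = goal.split()[-1]
    return [("lookup_host", host), ("recent_alerts", host)]


@dataclass
class Agent:
    profile: str                                     # profiling module: the agent's role
    tools: dict[str, Callable[[str], str]]           # action module: what it can do
    planner: Callable[..., list[tuple[str, str]]]    # planning module
    memory: list[str] = field(default_factory=list)  # memory module

    def run(self, goal: str) -> list[str]:
        """Plan the steps, execute each tool, and record every result in memory."""
        for tool_name, argument in self.planner(self.profile, goal, self.memory):
            result = self.tools[tool_name](argument)
            self.memory.append(f"{tool_name}({argument}) -> {result}")
        return self.memory


agent = Agent(
    profile="Tier-1 triage assistant",
    tools={"lookup_host": lookup_host, "recent_alerts": recent_alerts},
    planner=stub_planner,
)
for entry in agent.run("Triage host ws01"):
    print(entry)
```

Swapping `stub_planner` for a function that calls an LLM is where the reasoning would come from. The rest of the loop, choosing a tool, executing it, and remembering the result, would stay the same.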

Announcing Blog Series 💜

This blog post is just the beginning and wasn't intended to dive into every detail or the many AI methods in development and study. So far we've highlighted four key approaches to showcase the evolution and variety of AI techniques applied to cybersecurity. As the AI landscape is changing rapidly, it's crucial to understand where each type - traditional AI (TAI) and generative AI (GenAI) - currently excels, how they complement each other, and why TAI still plays a vital role in the core detection capabilities of enterprises.

In the upcoming blog series, I'll delve deeper into several core techniques and concepts behind both Good old-fashioned AI (GOFAI) and TAI. This exploration will help us understand the benefits and applications of GenAI in cybersecurity.

Buckle Up & Get Ready!!

Thank you for reading this first blog post! In our next post, we are going to introduce the topic of anomaly detection and basic terminology that will be helpful during the whole series. I'll take a closer look at various algorithms used to identify unusual Windows logon sessions. We'll explore key concepts like probability distribution, descriptive statistics, and data normalization. Plus, we'll touch on more complex topics such as autocorrelation, multicollinearity, reducing data dimensions, how to measure similarity, the basics of perceptrons and neural networks, and the process of encoding and decoding data.

References

- Amazon Web Services. (2023, January 1). Use Indicators of Compromise (IOCs) to Detect Security Incidents. Contributors: Anna McAbee, Freddy Kasprzykowski, Jason Hurst, Jonathon Poling, Josh Du Lac, Paco Hope, Ryan Tick, Steve de Vera. https://docs.aws.amazon.com/whitepapers/latest/aws-security-incident-response-guide/use-indicators-of-compromise.html

- Beigi, P. [bigdataqueen]. (2022, September 6). Artificial Intelligence Explained - Part 3 [Instagram post]. Instagram. https://www.instagram.com/p/CiJzFjVOTvm/

- Beigi, P. [bigdataqueen]. (2023, April 25). Machine Learning Paradigms [Instagram post]. Instagram. https://www.instagram.com/p/CrcwtbousPj/

- Beigi, P. [bigdataqueen]. (2023, February 1). What is artificial intelligence? [Instagram post]. Instagram. https://www.instagram.com/p/CoJbKjwOHW4/

- Beigi, P. [bigdataqueen]. (2023, March 25). Natural Language Processing - A High Level History [Instagram post]. Instagram. https://www.instagram.com/p/Cp_71-KupJ_/

- Cichonski, P., Millar, T., Grance, T., & Scarfone, K. (2012, August). Computer Security Incident Handling Guide (NIST Special Publication 800-61, Rev. 2). Retrieved from https://nvlpubs.nist.gov/nistpubs/specialpublications/nist.sp.800-61r2.pdf

- Dror, R., Peled-Cohen, L., Shlomov, S., & Reichart, R. (2020). Statistical Significance Testing for Natural Language Processing (1st ed.). Springer International Publishing. https://doi.org/10.1007/978-3-031-02174-9

- James, G., Witten, D., Hastie, T., Tibshirani, R., & Taylor, J. (2023). An Introduction to Statistical Learning with Applications in Python (1st ed.). Springer International Publishing. https://doi.org/10.1007/978-3-031-38747-0

- Sugiyama, M. (2015). Statistical Reinforcement Learning: Modern Machine Learning Approaches (1st ed.). Chapman and Hall/CRC. https://doi.org/10.1201/b18188

- Wang, L., Ma, C., Feng, X., Zhang, Z., Yang, H., Zhang, J., Chen, Z., Tang, J., Chen, X., Lin, Y., Zhao, W. X., Wei, Z., & Wen, J.-R. (2023). A Survey on Large Language Model based Autonomous Agents. arXiv. https://doi.org/10.48550/arxiv.2308.11432

- Zhai, C. (2009). Statistical Language Models for Information Retrieval (1st ed.). Springer International Publishing. https://doi.org/10.1007/978-3-031-02130-5

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention Is All You Need. arXiv. https://doi.org/10.48550/arxiv.1706.03762

- Shah, A. (Speaker). (2023, December 19). LLM Agent Fine-Tuning: Enhancing Task Automation with Weights & Biases [Video]. DeepLearningAI. Retrieved from https://www.youtube.com/live/L8B_g2JOQAs?si=-M5rhVrdHNOYncz-