Rise of the Planet of the Agents 🤖: Creating an LLM-Based AI Agent from Scratch!

Ever since I began learning about Generative Artificial Intelligence (GenAI), I've been eager to explore beyond building AI chatbots for simple question-answering over documents. This curiosity led me to experiment with various LLMs, and through this process I learned about their potential not only for recalling information but also for reasoning in natural language and enabling autonomous decision-making. That is how I ended up in the world of autonomous AI agents. Even though AMAZING libraries like LangChain and LlamaIndex simplify building agents, I wanted to understand the internals. We all learn in different ways, and for me, that means writing things from scratch. So I knew I had to write my first agent from scratch, learning step by step along the way.

In this blog post, I share my journey writing my own agent in Python and explore the fundamental concepts behind LLM-based AI autonomous agents. If you're new to the world of GenAI, I highly recommend reading my other post, "Demystifying Generative AI 🤖 A Security Researcher's Notes", to familiarize yourself with the basic terminology and concepts for interacting with LLMs.

What is an Agent? 🐶

In the simplest terms, an agent is anything that can act on its own or make decisions. This could be a person, an animal, or any entity that has the autonomy to choose and act independently.

What is an AI Agent? 🤖

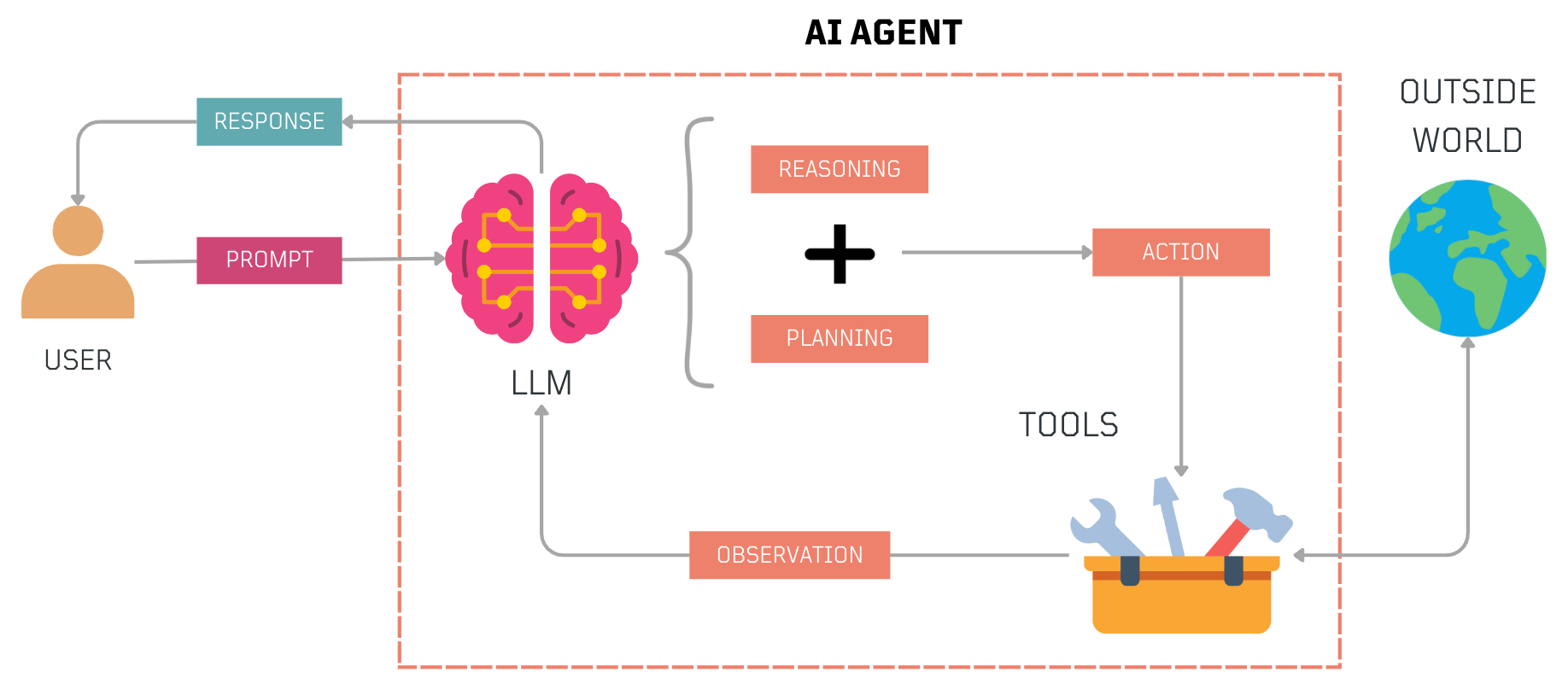

An AI agent is a computer program or machine that can act on its own, making decisions similar to how humans do. It uses data and AI to think through tasks and decides the best action to take. In addition, it can use tools to execute actions and gather new information, enhancing its decision-making process.

What is an LLM-Based AI Agent? 🤖➕🧠

An LLM-Based AI Agent uses an LLM as a reasoning engine to think through complex tasks in natural language. Its actions are not hardcoded; instead, it determines its own steps based on the user's input and past interactions. This agent combines language-based planning with actions to interact with the outside world in a non-deterministic way, much like how humans operate.

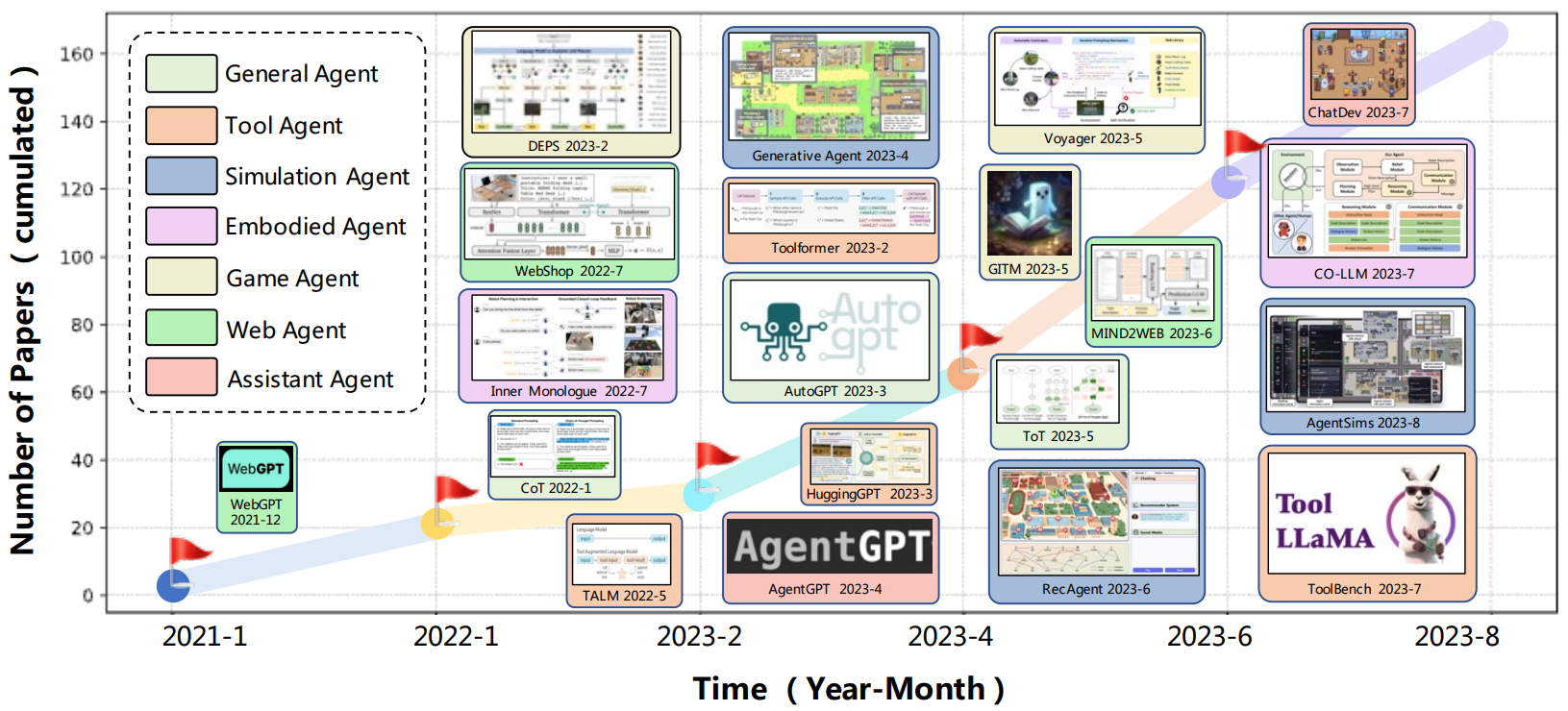

This is not a new concept: between January 2021 and August 2023, the number of research papers on this topic increased noticeably.

A Reasoning Engine? 🎮

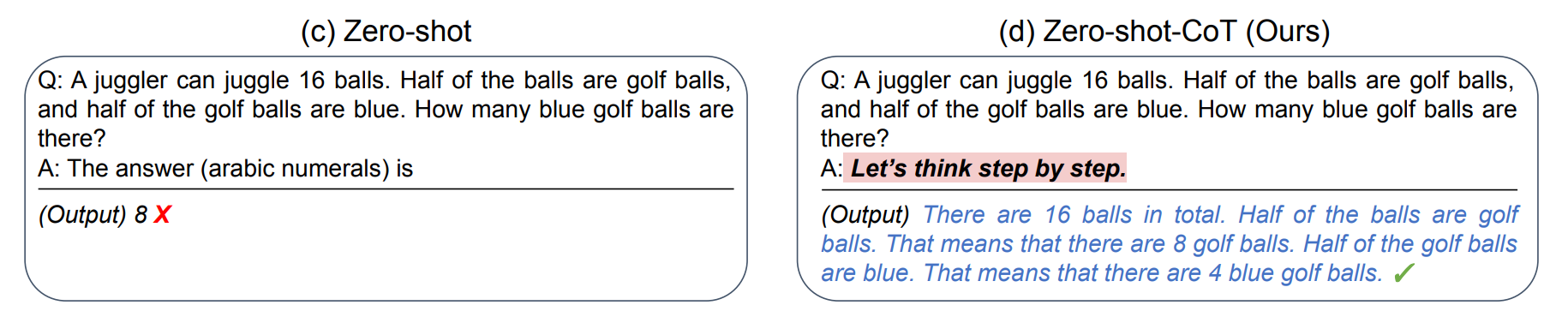

Reasoning is the process of thinking through a problem or situation logically. It's how we figure things out, whether it is solving a puzzle or deciding what to do in a particular situation. Reasoning involves analyzing information, evaluating options, and choosing the best course of action based on that analysis.

In an LLM-based AI agent, this ability to reason can be activated by prompts such as "Let's think step by step" or by providing examples of intermediate reasoning steps. This approach enables the agent to break a task down into smaller parts and work through each one methodically before arriving at the best answer.
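At the message level, both techniques are simply different ways of building the prompt. Here is a minimal sketch, assuming the OpenAI-style role/content message dictionaries used later in this post; the helper names `zero_shot_cot` and `few_shot_cot` and the worked example are hypothetical:

```python
from typing import Dict, List

COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str) -> List[Dict[str, str]]:
    """Zero-shot chain of thought: append a reasoning trigger to the question."""
    return [{"role": "user", "content": f"{question}\n{COT_TRIGGER}"}]


def few_shot_cot(question: str, examples: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Few-shot chain of thought: show worked examples with intermediate steps first."""
    messages: List[Dict[str, str]] = []
    for example in examples:
        messages.append({"role": "user", "content": example["question"]})
        messages.append({"role": "assistant", "content": example["reasoning"]})
    messages.append({"role": "user", "content": question})
    return messages


# Hypothetical worked example whose answer spells out each step
examples = [{
    "question": "A server logs 3 failed logins per minute. How many in 2 hours?",
    "reasoning": "2 hours is 120 minutes. 120 * 3 = 360. The answer is 360.",
}]

print(zero_shot_cot("How many failed logins would the server log in 45 minutes?")[0]["content"])
print(len(few_shot_cot("How many failed logins in 30 minutes?", examples)))  # 3
```

The few-shot variant costs more tokens, but it shows the model the exact shape of reasoning you expect instead of relying on a generic trigger phrase.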

Is Reasoning Enough? 🤔

After reading about LLMs and their ability to reason, I got curious about how an agent would interact with the real world. How would an agent validate its reasoning or learn new things from its environment? Can it reason reactively or update its knowledge? An agent needs to do more than just think; it needs to act.

This integration of thought and action is essential for AI agents to interact more effectively with their environments. It's not just about processing information but also about implementing decisions based on reasoning.

Anatomy of an LLM-based AI Agent 🤖

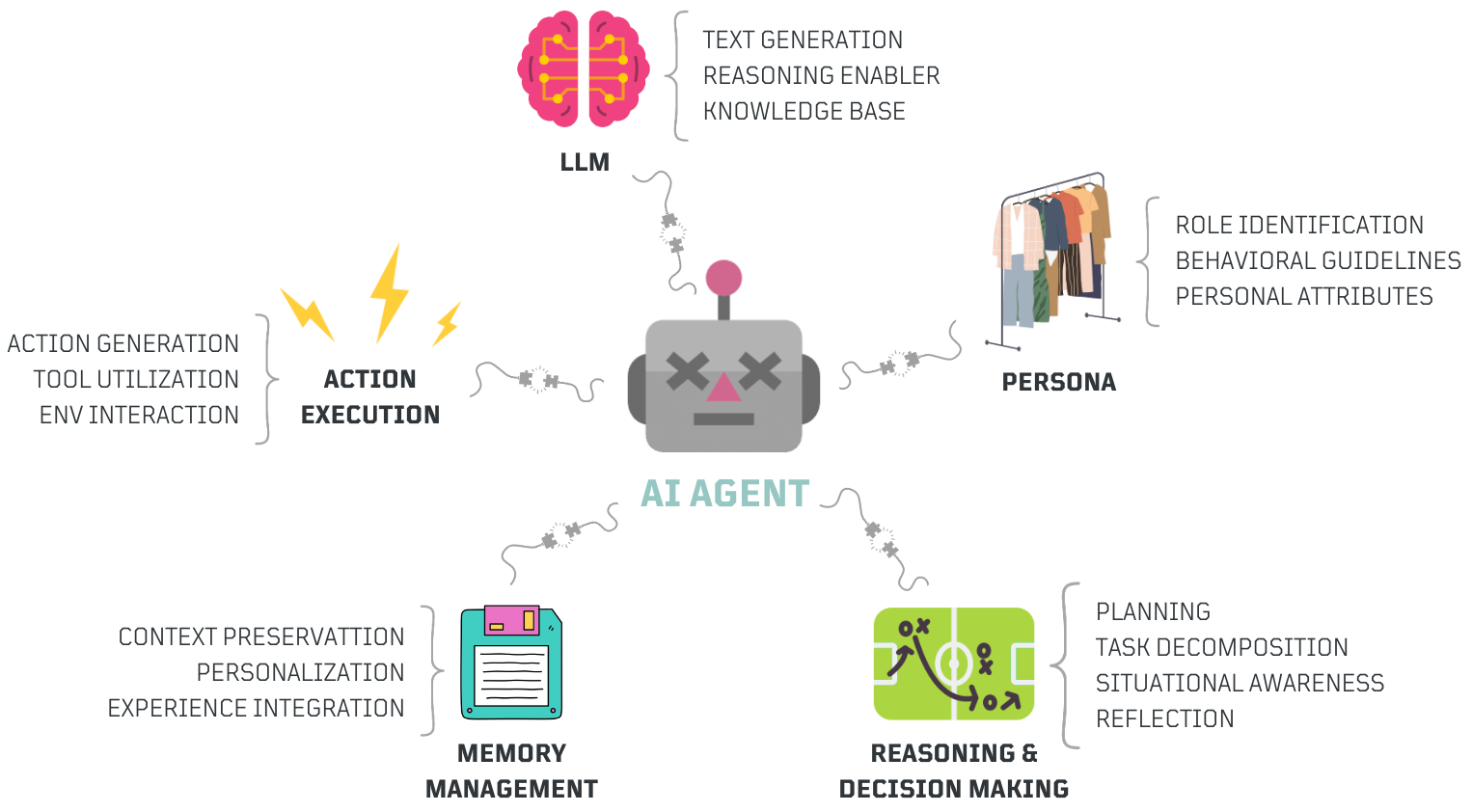

After exploring the fundamental concepts of agent reasoning and action, I wanted to outline the key components essential for building a functional LLM-based AI agent. These components form the backbone of how the agent processes information, makes decisions, and interacts with its environment.

- LLM Core
  - Produces coherent, relevant text.
  - Facilitates complex reasoning and problem-solving.
  - Integrates pre-trained knowledge to inform decisions.

- Persona
  - Assigns specific roles to guide the agent's behavior.
  - Defines personality traits and instructions.
  - Adds other attributes that shape the agent's identity.

- Memory Management
  - Maintains relevant context across interactions.
  - Customizes responses based on user preferences.
  - Leverages past interactions for better responses.

- Reasoning and Decision-Making
  - Formulates step-by-step strategies to achieve goals.
  - Breaks complex tasks down into sub-tasks.
  - Adapts to the current environment.
  - Evaluates past actions to refine future strategies.

- Action Execution
  - Creates specific actions based on decisions and plans.
  - Employs external tools to make actions more effective.
  - Interacts with the environment to execute tasks and get feedback.

Designing a Functional LLM-based AI Agent 🤖

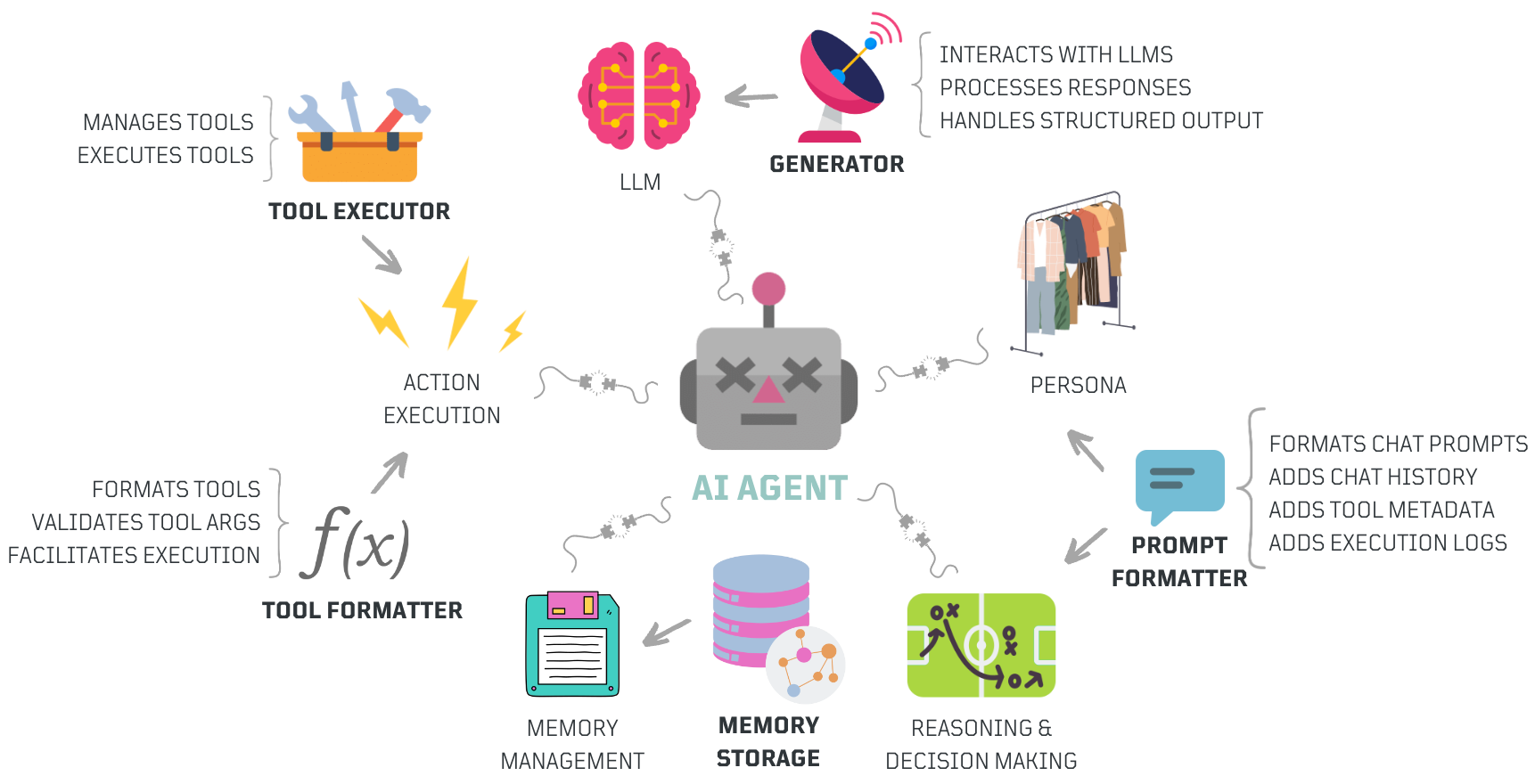

After documenting the key components of an AI agent, it was time to write some code. For my initial basic design, I needed a client to communicate with LLMs, a system to store and manage memory, tools and mechanisms for execution, and a way to define plans and personas.

- Generator: A client to interact with LLMs, primarily for generating text. It validates user input and processes structured responses.

- Memory: Enables both short-term and long-term memory management. This system can range from simple lists to advanced vector or graph databases, storing and retrieving interaction history as needed.

- Tool Formatter: Uses a decorator to format generic Python functions into a structured format that aligns with the AI agent's requirements for tool invocation. Remember, LLMs do not invoke tools, agents do.

- Tool Executor: Manages tool registration, maintains a catalog, and ensures tools are available and executed correctly.

- Prompt Formatter: Prepares prompt templates with chat history, tool details, and execution logs to ensure all essential information is included for effective reasoning and decision-making.

- AI Agent: Integrates all components, orchestrates their functions, and manages the agent's workflow. It initializes the agent, processes prompt templates, and uses the tool executor to execute actions.
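To make the boundaries between these components concrete, here is a minimal sketch of their interfaces using `typing.Protocol`. The method names mirror the classes built later in this post (`generate`, `add_message`, `get_messages`, `execute`), but the Protocol definitions and the `ListMemory` example are illustrative assumptions, not part of the original design:

```python
from typing import Any, Dict, List, Optional, Protocol


class Generator(Protocol):
    """Anything that turns a list of chat messages into a model response."""
    def generate(self, messages: List[Dict[str, Any]],
                 tools: Optional[List[Dict[str, Any]]] = None) -> Any: ...


class Memory(Protocol):
    """Stores and returns conversation history."""
    def add_message(self, message: Dict[str, Any]) -> None: ...
    def get_messages(self) -> List[Dict[str, Any]]: ...


class ToolExecutor(Protocol):
    """Runs a registered tool by name."""
    def execute(self, tool_name: str, **kwargs: Any) -> Any: ...


class ListMemory:
    """The simplest possible Memory: a plain Python list."""
    def __init__(self) -> None:
        self._messages: List[Dict[str, Any]] = []

    def add_message(self, message: Dict[str, Any]) -> None:
        self._messages.append(message)

    def get_messages(self) -> List[Dict[str, Any]]:
        return list(self._messages)  # return a copy so callers can't mutate history


def remember(memory: Memory, text: str) -> int:
    """Works with any object that structurally matches the Memory protocol."""
    memory.add_message({"role": "user", "content": text})
    return len(memory.get_messages())
```

Because Protocols are structural, a vector-store-backed memory could later replace `ListMemory` without the agent code changing, as long as it exposes the same two methods.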

The Agentic Journey Begins! 🧭

Let's now dive into the journey of creating an AI agent from scratch, exploring key components, challenges, and fundamental concepts along the way.

The Agent Base Class 🤖

The first component I defined was the Agent class itself. This foundational class was designed to be flexible and extensible, serving as a template for integrating more complex features as development progressed.

```python
class Agent:
    """
    Integrates LLM client, tools, and memory.
    """

    def __init__(self, llm_client=None, tools=None, memory=None):
        self.llm_client = llm_client
        self.tools = tools
        self.memory = memory

    def run(self):
        """
        Placeholder for the agent's main execution logic.
        """
        pass
```

Building the LLM Client 📡

Next, I created a class to interact with LLMs, focusing on OpenAI's models because of their performance. I'll cover local LLMs in a future post.

The OpenAI Chat Completion Class

This class interacts with OpenAI's Chat Completions API, sending messages and returning the assistant's message from the response.

```python
from typing import Dict, Any, List

import openai


class OpenAIChatCompletion:
    """
    Interacts with OpenAI's API for chat completions.
    """

    def __init__(self, model: str, api_key: str = None, base_url: str = None):
        """
        Initialize with model, API key, and base URL.
        """
        self.client = openai.OpenAI(api_key=api_key, base_url=base_url)
        self.model = model

    def generate(self, messages: List[Dict], **kwargs) -> Any:
        """
        Generate a response from input messages.
        """
        params = {'messages': messages, 'model': self.model, **kwargs}
        response = self.client.chat.completions.create(**params)
        # Return the assistant's message object from the first choice
        return response.choices[0].message
```

🚦Assembly Line: Integrating LLM Client with AI Agent 🤖

This enables the agent to leverage the LLM client for generating responses.

```python
from typing import Dict, List


class Agent:
    """
    Integrates LLM client, tools, and memory.
    """

    def __init__(self, llm_client, tools=None, memory=None):
        self.llm_client = llm_client
        self.tools = tools
        self.memory = memory

    def run(self, messages: List[Dict[str, str]]):
        """
        Generates a response from the LLM client.
        """
        # Generate response using the LLM client
        response = self.llm_client.generate(messages)
        return response
```

Testing the Updated Agent Class 🔬

To handle the API key safely, store it as an OPENAI_API_KEY variable in a .env file and use python-dotenv to load it as an environment variable. The OpenAI library detects and uses this variable automatically.

```python
from dotenv import load_dotenv

load_dotenv()  # Load environment variables from .env
```

Next, initialize the new class, define a few messages, and run the agent:

```python
# Initialize the OpenAI client
client = OpenAIChatCompletion(base_url='https://api.openai.com/v1', model='gpt-4')

# Define a set of messages
messages = [
    {"role": "system", "content": "You are a security assistant."},
    {"role": "user", "content": "Hey! This is Roberto!"}
]

# Initialize the agent with the LLM client
myAgent = Agent(llm_client=client)

# Use the agent to run a task
response = myAgent.run(messages=messages)
print(response)
```

```
ChatCompletionMessage(content='Hello Roberto! How can I assist you today regarding security matters?', role='assistant', function_call=None, tool_calls=None)
```
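To exercise the `Agent` class without network access or an API key, one option is a stub client that exposes the same `generate(messages)` method. The `EchoClient` below is a hypothetical test double, not part of the OpenAI library; it returns a `SimpleNamespace` with `role`, `content`, and `tool_calls` attributes, loosely imitating the `ChatCompletionMessage` printed above. The `Agent` class is repeated so the sketch runs on its own:

```python
from types import SimpleNamespace
from typing import Dict, List


class EchoClient:
    """Hypothetical offline stand-in for OpenAIChatCompletion."""
    def __init__(self):
        self.calls: List[List[Dict[str, str]]] = []  # record every request

    def generate(self, messages: List[Dict[str, str]], **kwargs):
        self.calls.append(messages)
        last_user = messages[-1]["content"]
        return SimpleNamespace(role="assistant", content=f"Echo: {last_user}", tool_calls=None)


class Agent:
    """Same minimal Agent as above: forwards messages to the LLM client."""
    def __init__(self, llm_client, tools=None, memory=None):
        self.llm_client = llm_client
        self.tools = tools
        self.memory = memory

    def run(self, messages: List[Dict[str, str]]):
        return self.llm_client.generate(messages)


stub = EchoClient()
agent = Agent(llm_client=stub)
reply = agent.run([
    {"role": "system", "content": "You are a security assistant."},
    {"role": "user", "content": "Hey! This is Roberto!"},
])
print(reply.content)  # Echo: Hey! This is Roberto!
```

Recording the requests in `stub.calls` also makes it easy to check exactly which messages the agent sent, which becomes useful once memory is added.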

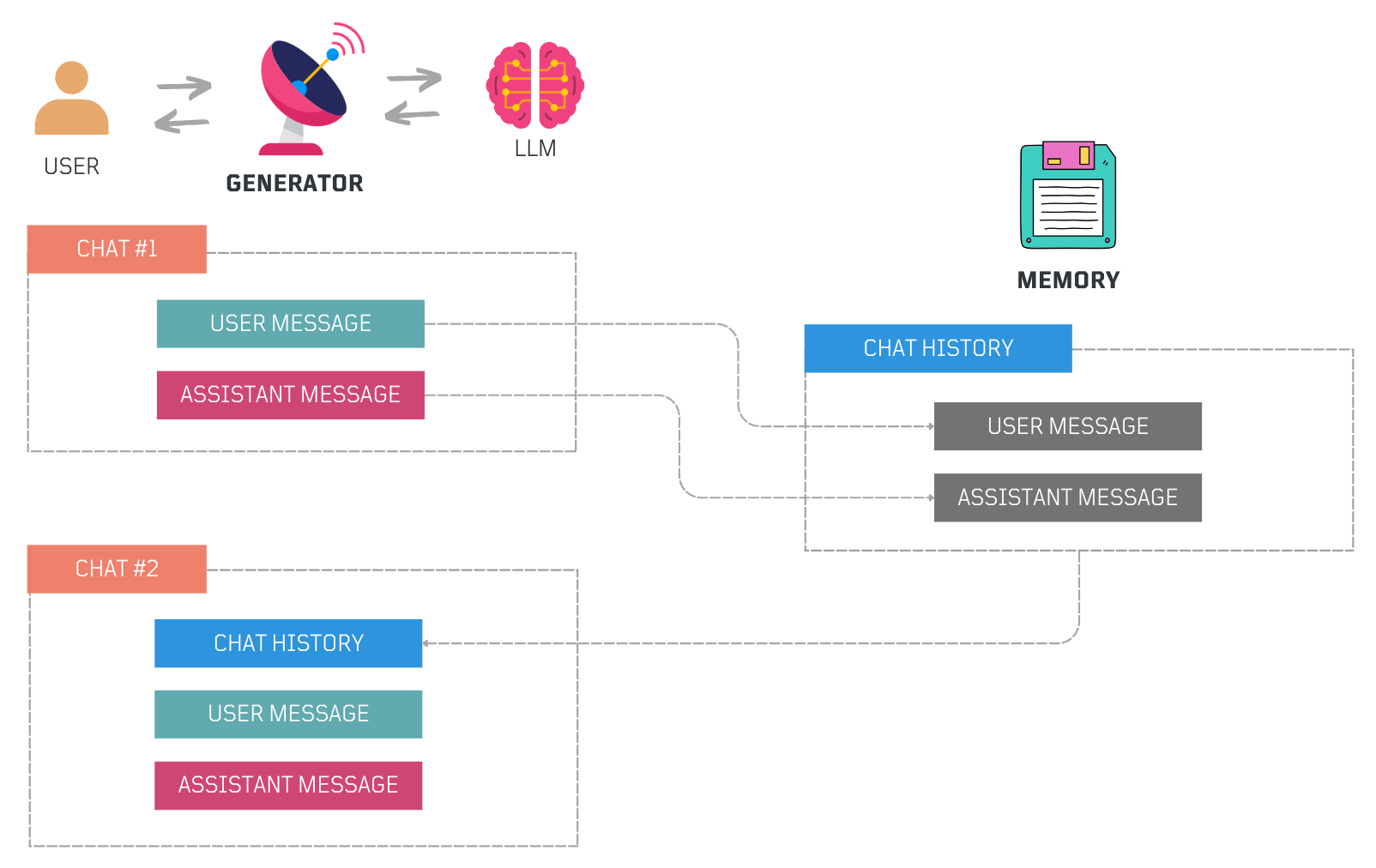

Implementing Short-Term Memory 💾

After setting up the LLM client, I focused on enabling the agent to recall previous interactions. Short-term memory holds the recent conversation that fits within the LLM's context window, while long-term memory relies on stores such as vector or graph databases to retrieve relevant parts of a much longer chat history.
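Because short-term memory has to fit in the context window, one simple policy (an assumption here, not what the class below does) is to keep only the most recent N messages. A minimal sketch using `collections.deque` and its `maxlen` argument; the class name `WindowedChatMemory` is hypothetical:

```python
from collections import deque
from typing import Deque, Dict, List


class WindowedChatMemory:
    """Keeps only the last `max_messages` messages; older ones are dropped automatically."""
    def __init__(self, max_messages: int = 20):
        self.messages: Deque[Dict[str, str]] = deque(maxlen=max_messages)

    def add_message(self, message: Dict[str, str]) -> None:
        self.messages.append(message)  # the deque evicts the oldest item when full

    def get_messages(self) -> List[Dict[str, str]]:
        return list(self.messages)


memory = WindowedChatMemory(max_messages=3)
for i in range(5):
    memory.add_message({"role": "user", "content": f"message {i}"})
print([m["content"] for m in memory.get_messages()])
# ['message 2', 'message 3', 'message 4']
```

Counting messages is only a rough proxy for context size; budgeting by tokens is more precise. Also note that with OpenAI's API a tool message must follow the assistant message that requested it, so a real windowing policy should avoid splitting such pairs.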

The Short-Term Memory Class

This class stores chat messages in a list and provides access to the conversation so far.

```python
from typing import List, Dict


class ChatMessageMemory:
    """Manages conversation context."""

    def __init__(self):
        self.messages = []

    def add_message(self, message: Dict):
        """Add a message to memory."""
        self.messages.append(message)

    def add_messages(self, messages: List[Dict]):
        """Add multiple messages to memory."""
        for message in messages:
            self.add_message(message)

    def add_conversation(self, user_message: Dict, assistant_message: Dict):
        """Add a user-assistant conversation."""
        self.add_messages([user_message, assistant_message])

    def get_messages(self) -> List[Dict]:
        """Retrieve all messages."""
        return self.messages.copy()

    def reset_memory(self):
        """Clear all messages."""
        self.messages = []
```

🚦Assembly Line: Integrating Memory with AI Agent 🤖

With the LLM client and memory class ready, the next step was to integrate memory into the Agent class. The agent is initialized with a system message. On each run, the user message is added to memory, the system message is prepended to the stored history, and the LLM's response is saved back to memory. This lets the agent generate, store, and retrieve conversation context.

```python
from typing import Dict


class Agent:
    """Integrates LLM client, tools, and memory."""

    def __init__(self, llm_client, system_message: Dict[str, str], tools=None):
        self.llm_client = llm_client
        self.tools = tools
        self.memory = ChatMessageMemory()
        self.system_message = system_message

    def run(self, user_message: Dict[str, str]):
        """Generate a response using LLM client and store context."""
        self.memory.add_message(user_message)
        # Prepend the system message to the stored conversation
        chat_history = [self.system_message] + self.memory.get_messages()
        response = self.llm_client.generate(chat_history)
        # Store the assistant's reply so later turns can refer to it
        self.memory.add_message(response)
        return response
```

Testing the Updated Agent Class 🔬

```python
# Test the updated agent
client = OpenAIChatCompletion(base_url='https://api.openai.com/v1', model='gpt-4')

# Define the system message
system_message = {"role": "system", "content": "You are a security assistant."}

# Initialize the Agent with the LLM client and system message
agent = Agent(llm_client=client, system_message=system_message)

# Define a user message
user_message = {"role": "user", "content": "Hey! This is Roberto!"}

# Generate a response using the agent
response = agent.run(user_message)

# Add a follow-up message to test memory integration
follow_up_message = {"role": "user", "content": "What was my name?"}

# Run the agent again with the new message
response = agent.run(follow_up_message)
print(response)
```

```
ChatCompletionMessage(content='Your name is Roberto.', role='assistant', function_call=None, tool_calls=None)
```

Enabling Tool Execution v1 🛠️

After setting up LLM interaction and memory integration, the next step was enabling tool use. The goal was for the LLM to help the agent choose the right tools based on the task and provide the correct inputs for each tool. This ensures the agent selects and executes tools effectively.

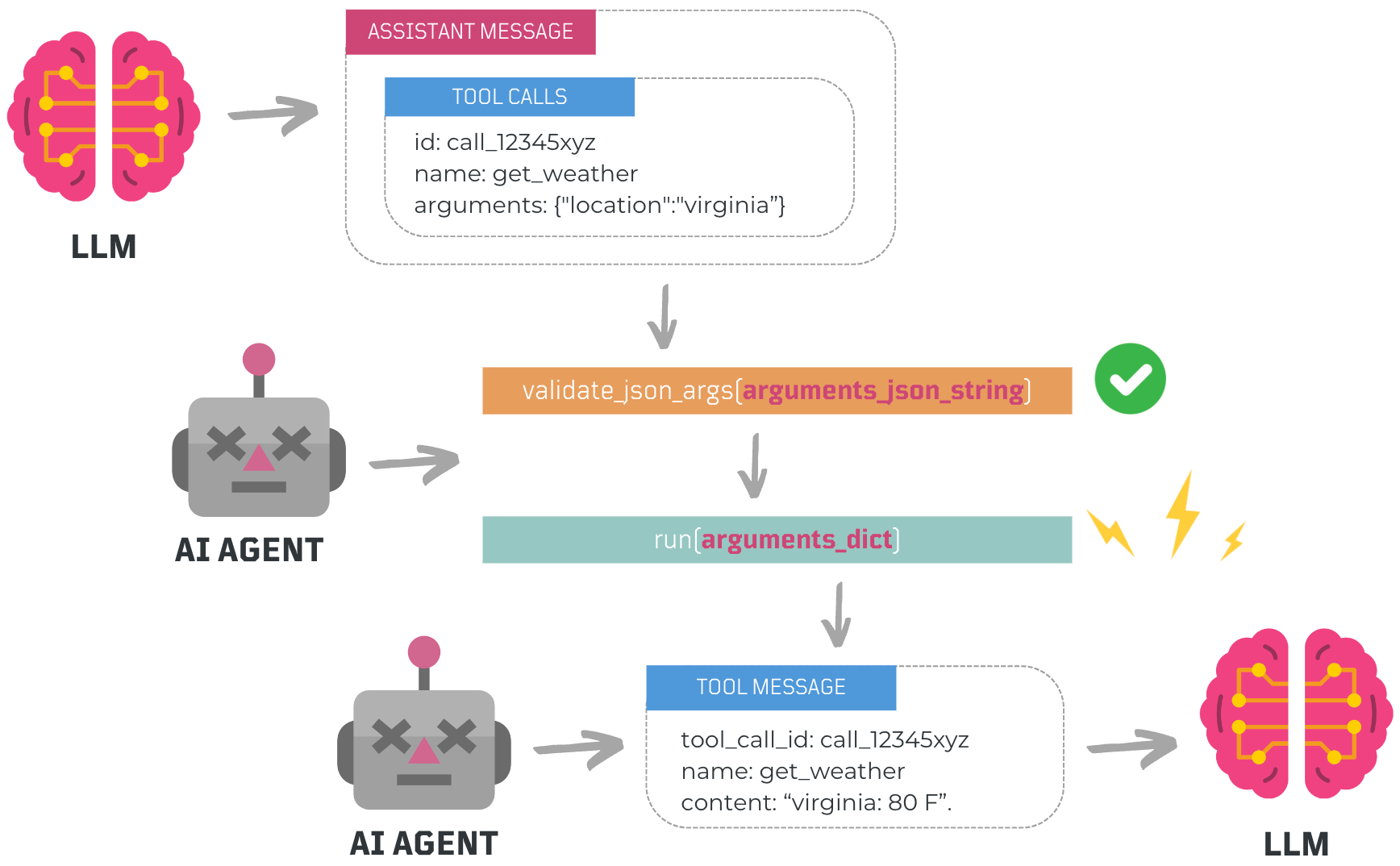

Selecting the Right Tool Via OpenAI Function Calling Capabilities 💫

One reliable method for choosing tools and returning structured outputs is OpenAI's Function Calling. Introduced on June 13, 2023, this feature allows developers to describe functions to models trained to generate a JSON object with the necessary arguments based on user input.

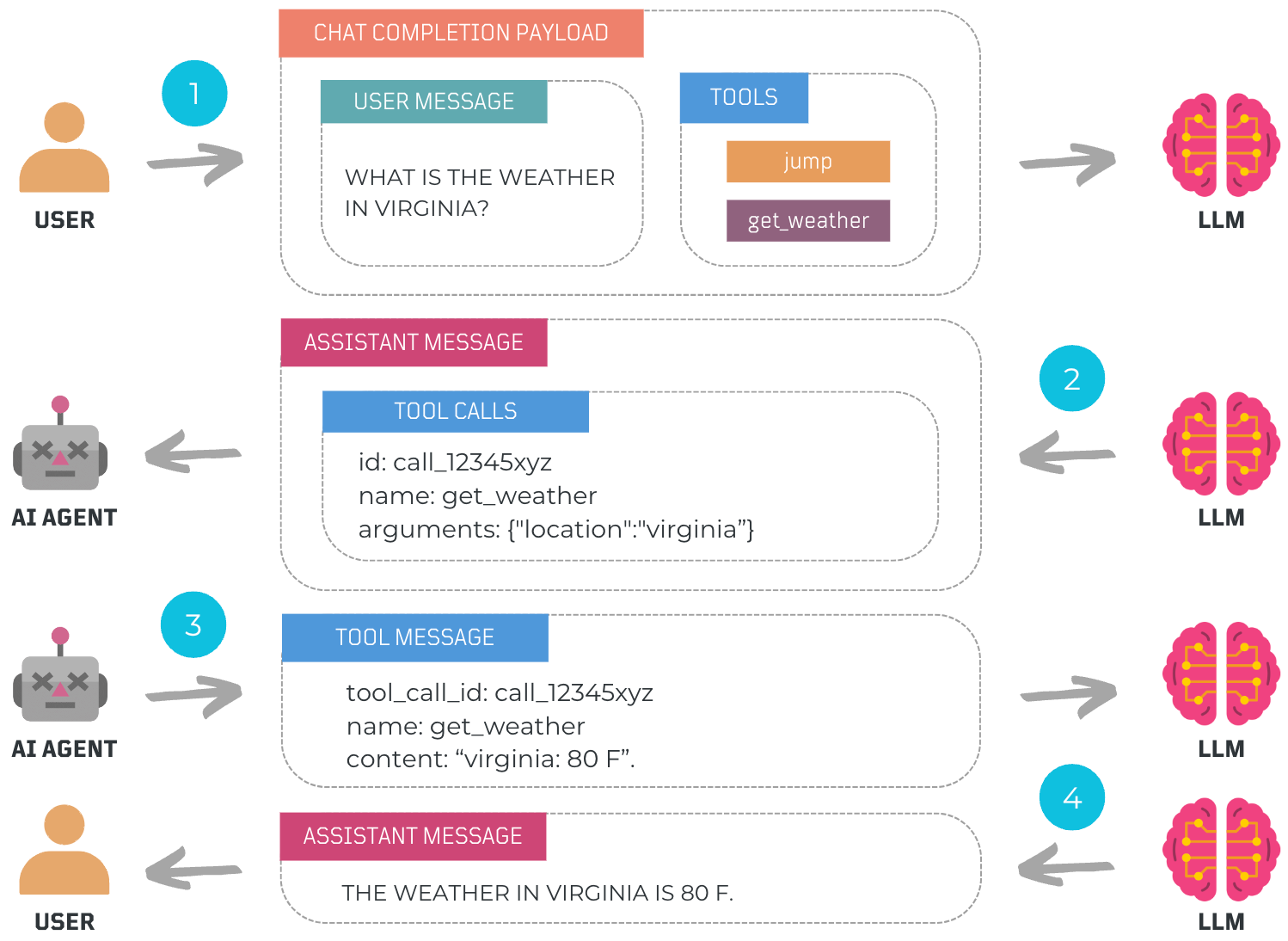

Let's see how this works:

- The user's query, describing a task, is sent to the LLM together with the definitions of the available tools.

- The LLM selects the right tool for the task, supplying a unique ID, the tool name, and the tool arguments as a JSON string for the agent to execute.

- The AI agent parses the JSON string, executes the tool with the provided arguments, and submits the results back as a tool message.

- Finally, the LLM summarizes the tool execution results in the context of the user's query, delivering a comprehensive final response.
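The same flow can be written out as the list of messages exchanged during one tool-assisted turn. This is a hand-built sketch with plain dictionaries and no API call; the call ID `call_123` is made up, while the field names (`tool_calls`, `tool_call_id`, and `arguments` as a JSON string) follow OpenAI's Chat Completions message format:

```python
import json

# 1. The user asks a question; tool definitions travel separately in the `tools` parameter.
user_msg = {"role": "user", "content": "What is the weather in Virginia?"}

# 2. The model answers with a tool call instead of text. Arguments arrive as a JSON *string*.
assistant_msg = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_123",  # hypothetical ID; the API generates a unique one
        "type": "function",
        "function": {"name": "get_weather", "arguments": '{"location": "Virginia"}'},
    }],
}


# 3. The agent (not the LLM) parses the arguments and runs the tool.
def get_weather(location: str) -> str:
    return f"{location}: 80F."


call = assistant_msg["tool_calls"][0]
result = get_weather(**json.loads(call["function"]["arguments"]))

# 4. The result goes back as a tool message, linked to the request by tool_call_id.
tool_msg = {"role": "tool", "tool_call_id": call["id"], "content": result}

# 5. Sending the whole history lets the model write the final natural-language answer.
history = [user_msg, assistant_msg, tool_msg]
print(tool_msg["content"])  # Virginia: 80F.
```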

Testing OpenAI's Function Calling Capability 🔍

1️⃣ Update OpenAI Chat Completion Client

First, I needed to update the client to accept an additional tools parameter, allowing users to pass the tool details along with the user message.

```python
from typing import Dict, Any, List, Optional

import openai


class OpenAIChatCompletion:
    """Interacts with OpenAI's API for chat completions."""

    def __init__(self, model: str, api_key: str = None, base_url: str = None):
        self.client = openai.OpenAI(api_key=api_key, base_url=base_url)
        self.model = model

    def generate(self, messages: List[Dict], tools: Optional[List[Dict[str, Any]]] = None, **kwargs) -> Any:
        """Generates a response from OpenAI's API."""
        params = {'messages': messages, 'model': self.model, **kwargs}
        # Only send the tools parameter when tool definitions are provided
        if tools:
            params['tools'] = tools
        response = self.client.chat.completions.create(**params)
        return response.choices[0].message
```

2️⃣ Update Agent Class to Integrate Tools

Next, I updated the Agent class to pass tools to the LLM client.

```python
from typing import Dict


class Agent:
    """Integrates LLM client, tools, and memory."""

    def __init__(self, llm_client, system_message: Dict[str, str], tools=None):
        self.llm_client = llm_client
        self.tools = tools
        self.memory = ChatMessageMemory()
        self.system_message = system_message

    def run(self, user_message: Dict[str, str]):
        self.memory.add_message(user_message)
        chat_history = [self.system_message] + self.memory.get_messages()
        response = self.llm_client.generate(chat_history, tools=self.tools)
        self.memory.add_message(response)
        return response
```

3️⃣ Define Dummy Python Functions

I defined a few dummy Python functions to test these capabilities.

```python
def get_weather(location: str) -> str:
    """Gets weather information."""
    return f"{location}: 80F."


def jump(distance: str) -> str:
    """Jumps a specific distance."""
    return f"I jumped the following distance {distance}"
```

4️⃣ Define the Python Function Signature as OpenAI Function Calls

I then defined a dictionary for each tool following the expected schema.

```python
get_weather_func_dict = {
    'type': 'function',
    'function': {
        'name': 'get_weather',
        'description': 'Get weather information based on location.',
        'parameters': {
            'properties': {'location': {'type': 'string'}},
            'required': ['location'],
            'type': 'object'
        }
    }
}

jump_func_dict = {
    'type': 'function',
    'function': {
        'name': 'jump',
        'description': 'Jump a specific distance.',
        'parameters': {
            'properties': {'distance': {'type': 'string'}},
            'required': ['distance'],
            'type': 'object'
        }
    }
}
```

5️⃣ Initialize Agent and Test It

I then initialized the agent with the LLM client and the tool definitions, and asked it a question.

```python
client = OpenAIChatCompletion(base_url='https://api.openai.com/v1', model='gpt-4')

# Define the system message
system_message = {"role": "system", "content": "You are a helpful assistant."}

# Gather all available tools
tools = [get_weather_func_dict, jump_func_dict]

# Initialize the Agent with the LLM client, system message, and tools
agent = Agent(llm_client=client, system_message=system_message, tools=tools)

# Define a user message
user_message = {"role": "user", "content": "What is the weather in Virginia?"}

# Generate a response using the agent
response = agent.run(user_message)
response
```

```
ChatCompletionMessage(content=None, role='assistant', function_call=None, tool_calls=[ChatCompletionMessageToolCall(id='call_HFyUnaAmRc9trG4HdBwdjg7v', function=Function(arguments='{\n "location": "Virginia"\n}', name='get_weather'), type='function')])
```

6️⃣ Capture Assistant's Response and Extract Tool Details

The LLM returned a message with the tool details and suggested arguments.

```python
import json

tool_response = response.tool_calls[0]
tool_arguments = json.loads(tool_response.function.arguments)
tool_arguments
```

```
{'location': 'Virginia'}
```

7️⃣ Execute the Tool with the Function Arguments

```python
tool_execution_results = get_weather(**tool_arguments)
tool_execution_results
```

```
'Virginia: 80F.'
```

8️⃣ Craft a Tool Message and Send It to the LLM for a Final Response

```python
tool_message = {
    "role": "tool",
    "tool_call_id": tool_response.id,
    "name": tool_response.function.name,
    "content": str(tool_execution_results)
}

final_response = agent.run(tool_message)
final_response
```

```
ChatCompletionMessage(content='The current weather in Virginia is 80°F.', role='assistant', function_call=None, tool_calls=None)
```
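Steps 6 to 8 only handle `tool_calls[0]`. When the model requests several tools in one response, the same logic can be applied in a loop. A minimal sketch, assuming tool calls shaped like the `ChatCompletionMessageToolCall` objects above (simulated here with `SimpleNamespace`) and a hypothetical `registry` dictionary mapping tool names to Python functions:

```python
import json
from types import SimpleNamespace
from typing import Any, Callable, Dict, List


def get_weather(location: str) -> str:
    return f"{location}: 80F."


def jump(distance: str) -> str:
    return f"I jumped the following distance {distance}"


# Hypothetical name -> function lookup table
registry: Dict[str, Callable[..., Any]] = {"get_weather": get_weather, "jump": jump}


def build_tool_messages(tool_calls: List[Any]) -> List[Dict[str, str]]:
    """Run every requested tool and return one tool message per call."""
    messages = []
    for call in tool_calls:
        func = registry[call.function.name]
        args = json.loads(call.function.arguments)
        messages.append({
            "role": "tool",
            "tool_call_id": call.id,
            "name": call.function.name,
            "content": str(func(**args)),
        })
    return messages


# Simulated tool calls standing in for response.tool_calls
fake_calls = [
    SimpleNamespace(id="call_1", function=SimpleNamespace(name="get_weather", arguments='{"location": "Virginia"}')),
    SimpleNamespace(id="call_2", function=SimpleNamespace(name="jump", arguments='{"distance": "3 meters"}')),
]
for msg in build_tool_messages(fake_calls):
    print(msg["content"])
```

All of these tool messages should be added to the history before the next LLM call, since the API expects a response for every `tool_call_id` in the preceding assistant message.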

Awesome, right? But how do we ensure the tool arguments are valid? 🤔

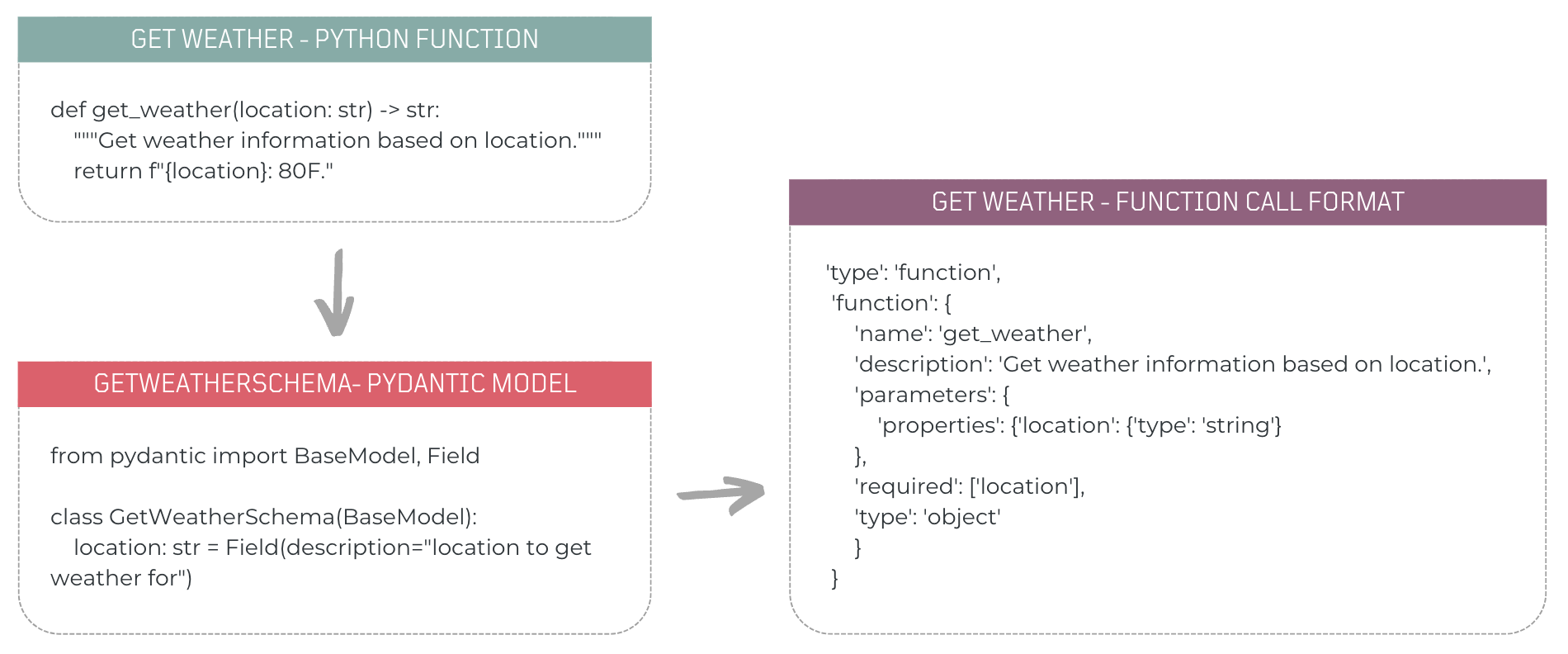

Enter Pydantic: Validating Tool Arguments 🔍

Pydantic is a powerful library that leverages Python type hints for efficient data validation and serialization. Using Pydantic models, we can define and validate tool arguments, ensuring correct data types and structure.

Define Tool Argument Schema as a Pydantic Model

First, I defined the tool's argument schema using Pydantic to validate the suggested tool arguments. For example:

```python
# Python function
def get_weather(location: str) -> str:
    """Get weather information based on location."""
    return f"{location}: 80F."


# Pydantic Model
from pydantic import BaseModel, Field


class GetWeatherSchema(BaseModel):
    """Get weather information based on location."""
    location: str = Field(description="Location to get weather for")
```

Validate Suggested Tool Argument Schema

To validate the suggested tool argument schema, follow the previous steps up to initializing the agent and generating a response.

6️⃣ Capture Assistant's Response and Extract Tool Details

The LLM returned a message with the tool details and suggested arguments.

```python
# Extract tool arguments (JSON string)
tool_response = response.tool_calls[0]
tool_arguments = tool_response.function.arguments
```

Instead of manually converting the JSON string, validate it directly with Pydantic's model_validate_json() utility.

```python
response_model = GetWeatherSchema.model_validate_json(tool_arguments)
response_model
```

```
GetWeatherSchema(location='Virginia')
```
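Validation pays off when the model's arguments are wrong. The sketch below, assuming Pydantic v2 (which provides `model_validate_json`), feeds three payloads to the same schema: a valid one, one with a misnamed key, and one that is not valid JSON at all. The two bad payloads raise `pydantic.ValidationError` instead of reaching the tool:

```python
from pydantic import BaseModel, Field, ValidationError


class GetWeatherSchema(BaseModel):
    """Get weather information based on location."""
    location: str = Field(description="Location to get weather for")


payloads = [
    '{"location": "Virginia"}',  # valid
    '{"city": "Virginia"}',      # wrong key: required "location" is missing
    '{"location": ',             # truncated, malformed JSON
]

results = []
for payload in payloads:
    try:
        GetWeatherSchema.model_validate_json(payload)
        results.append("ok")
    except ValidationError as exc:
        # Record the type of the first reported error
        results.append(exc.errors()[0]["type"])

print(results)  # ['ok', 'missing', 'json_invalid']
```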

You can also generate JSON schemas from Pydantic models using Pydantic's model_json_schema() utility.

```python
GetWeatherSchema.model_json_schema()
```

```
{'description': 'Get weather information based on location.',
 'properties': {'location': {'description': 'Location to get weather for',
                             'title': 'Location',
                             'type': 'string'}},
 'required': ['location'],
 'title': 'GetWeatherSchema',
 'type': 'object'}
```

Formatting Tools for OpenAI Function Calls with Pydantic Models 🔥

With tool argument validation in place, I moved on to formatting tools for OpenAI's function calling feature using Pydantic models.

The idea is to use the tool's Pydantic model to create its function call format.

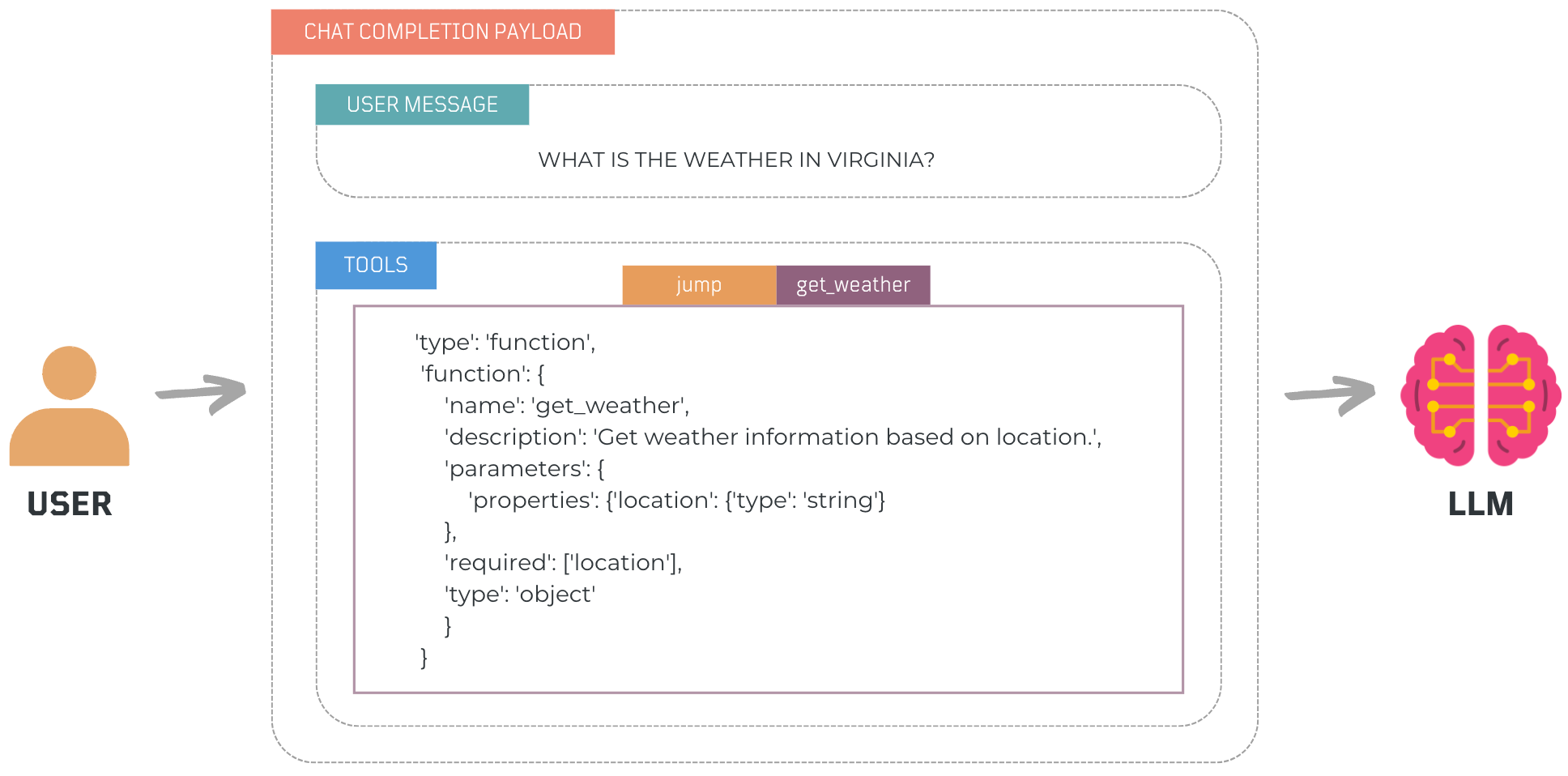

The OpenAI's Function Calling Format

OpenAI's Function Calling feature requires each tool definition to follow a specific format that includes the function's name, description, and parameters. The API now expects these definitions in the tools parameter, which replaced the older functions parameter and wraps each function in an object with a "type": "function" field.

```json
"tools": [
  {
    "type": "function",
    "function": {
      "name": "function_name",
      "description": "Function description. (optional)",
      "parameters": "The parameters the function accepts, described as a JSON Schema object. (optional)"
    }
  }
]
```

From Pydantic Models to OpenAI Function Calls

I used the previous Pydantic model GetWeatherSchema and created a function to convert the tool's argument schema into OpenAI's Function Calling format.

```python
from typing import Type

from pydantic import BaseModel


def to_openai_function_call_definition(name: str, model: Type[BaseModel]) -> dict:
    schema_dict = model.model_json_schema()
    # The model's docstring (if any) becomes the function description
    description = schema_dict.pop("description", "")
    schema_dict.pop("title", None)  # Remove the title field to exclude the model name
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": schema_dict
        }
    }
```

Testing this new capability:

```python
function_call_definition = to_openai_function_call_definition("get_weather", GetWeatherSchema)
function_call_definition
```

```
{'type': 'function',
 'function': {'name': 'get_weather',
  'description': 'Get weather information based on location.',
  'parameters': {'properties': {'location': {'description': 'Location to get weather for',
     'title': 'Location',
     'type': 'string'}},
   'required': ['location'],
   'type': 'object'}}}
```
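As a quick sanity check, the generated definition can be compared against the hand-written `get_weather_func_dict` from step 4. The sketch below repeats both (so it runs on its own) and uses a hypothetical `same_shape` helper to check the parts the model relies on: the function name, the required fields, and each parameter's JSON type. The extra `title` and `description` keys that Pydantic adds are expected to differ, so they are ignored:

```python
from typing import Type

from pydantic import BaseModel, Field


class GetWeatherSchema(BaseModel):
    """Get weather information based on location."""
    location: str = Field(description="Location to get weather for")


def to_openai_function_call_definition(name: str, model: Type[BaseModel]) -> dict:
    schema_dict = model.model_json_schema()
    description = schema_dict.pop("description", "")
    schema_dict.pop("title", None)
    return {"type": "function",
            "function": {"name": name, "description": description, "parameters": schema_dict}}


# Hand-written definition from step 4
get_weather_func_dict = {
    'type': 'function',
    'function': {
        'name': 'get_weather',
        'description': 'Get weather information based on location.',
        'parameters': {
            'properties': {'location': {'type': 'string'}},
            'required': ['location'],
            'type': 'object',
        },
    },
}


def same_shape(a: dict, b: dict) -> bool:
    """Compare name, required fields, and per-parameter JSON types."""
    pa, pb = a["function"]["parameters"], b["function"]["parameters"]
    types_a = {k: v["type"] for k, v in pa["properties"].items()}
    types_b = {k: v["type"] for k, v in pb["properties"].items()}
    return (a["function"]["name"] == b["function"]["name"]
            and pa["required"] == pb["required"]
            and types_a == types_b)


generated = to_openai_function_call_definition("get_weather", GetWeatherSchema)
print(same_shape(generated, get_weather_func_dict))  # True
```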

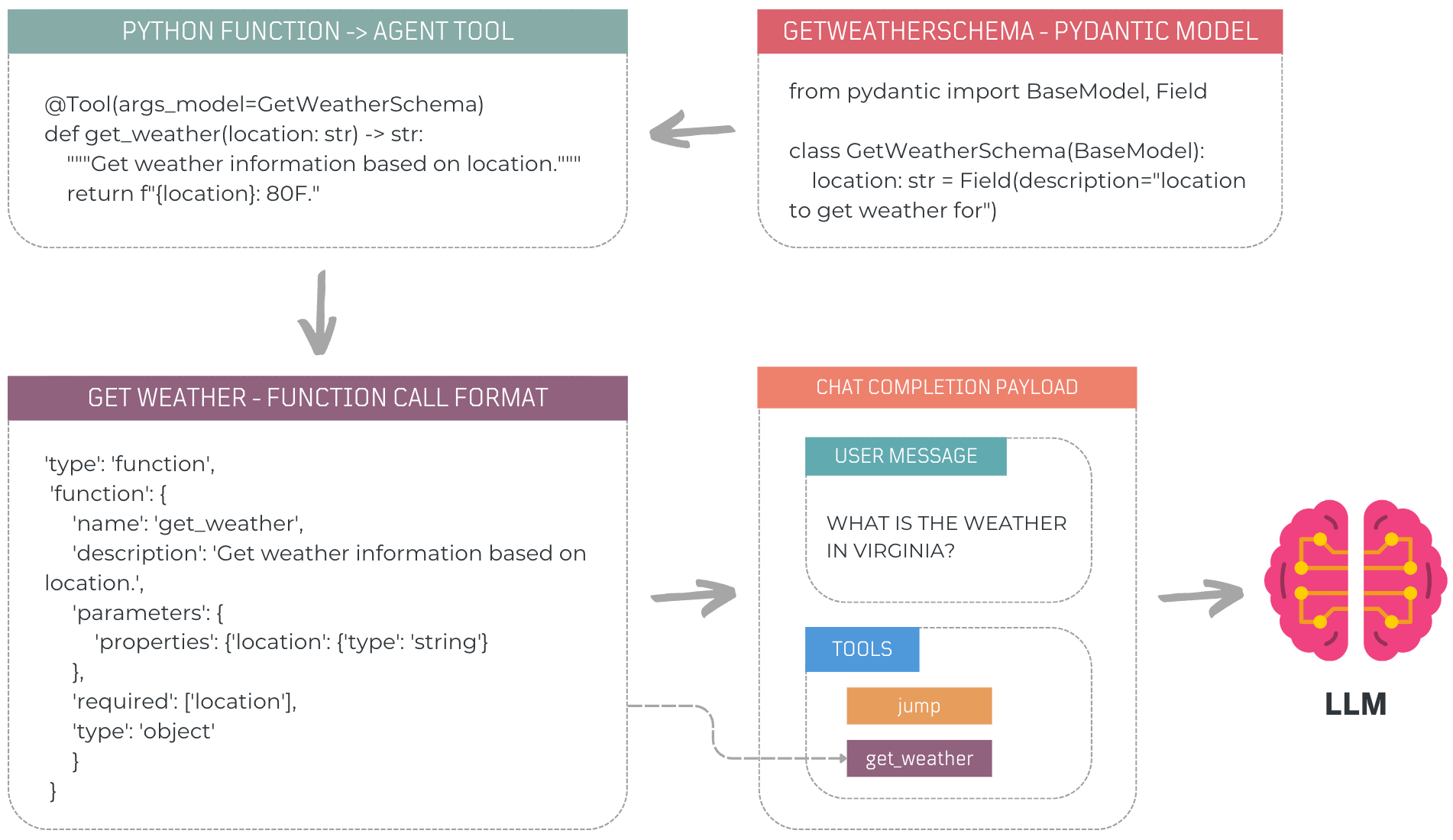

Streamlining the Agent Tool Concept ⚒️

To streamline the process of converting Python functions to OpenAI's Function Calling format, I created a dedicated class.

The Agent Tool Class 🔧

I developed the AgentTool class to encapsulate a Python function and its Pydantic model. This class includes methods for converting the tool to OpenAI's format and generating a JSON schema of the model.

```python
from pydantic import BaseModel
from typing import Callable, Type


class AgentTool:
    """Encapsulates a Python function with Pydantic validation."""

    def __init__(self, func: Callable, args_model: Type[BaseModel]):
        self.func = func
        self.args_model = args_model
        self.name = func.__name__
        self.description = func.__doc__ or self.args_schema.get('description', '')

    def to_openai_function_call_definition(self) -> dict:
        """Converts the tool to OpenAI Function Calling format."""
        schema_dict = self.args_schema
        # Note: the description sent to the LLM comes from the Pydantic model's
        # docstring (if any), not from the function's docstring
        description = schema_dict.pop("description", "")
        return {
            "type": "function",
            "function": {
                "name": self.name,
                "description": description,
                "parameters": schema_dict
            }
        }

    @property
    def args_schema(self) -> dict:
        """Returns the tool's function argument schema as a dictionary."""
        schema = self.args_model.model_json_schema()
        schema.pop("title", None)
        return schema
```

The Tool Decorator ⚙️

To simplify creating AgentTool instances, I developed a Python Decorator. It ensures a function has a docstring and wraps it as an AgentTool with its model.

```python
from typing import Callable, Optional, Type

from pydantic import BaseModel


def check_docstring(func: Callable):
    """Ensure the function has a docstring."""
    if not func.__doc__:
        raise ValueError(f"Function '{func.__name__}' must have a docstring.")


def Tool(func: Optional[Callable] = None, *, args_model: Type[BaseModel]) -> AgentTool:
    """Decorator to wrap a function with an AgentTool instance."""
    def decorator(f: Callable) -> AgentTool:
        check_docstring(f)
        return AgentTool(f, args_model=args_model)
    return decorator(func) if func else decorator
```

You could then use the Tool decorator to create an AgentTool and convert it to OpenAI's Function Calling format.

```python
# Python function arguments schema
from pydantic import BaseModel, Field


class GetWeatherSchema(BaseModel):
    location: str = Field(description="location to get weather for")


@Tool(args_model=GetWeatherSchema)
def get_weather(location: str) -> str:
    """Get weather information based on location."""
    return f"{location}: 80F."


# Convert the AgentTool to OpenAI's Function Calling format
get_weather.to_openai_function_call_definition()
```

```
{'type': 'function',
 'function': {'name': 'get_weather',
  'description': '',
  'parameters': {'properties': {'location': {'description': 'location to get weather for',
     'title': 'Location',
     'type': 'string'}},
   'required': ['location'],
   'type': 'object'}}}
```

Validating Tool Arguments before Agent Tool Execution

To ensure tool arguments were validated before execution, I added functionality to the AgentTool class: a method that validates JSON strings using Pydantic's model_validate_json() utility, plus run and __call__ methods that validate parameters before executing the tool.

Here are the new methods added to the AgentTool class:

```python
from inspect import signature
from typing import Any, Callable, Type

from pydantic import BaseModel, ValidationError


class AgentTool:
    # Existing code...

    def validate_json_args(self, json_string: str) -> bool:
        """Validate JSON string using the Pydantic model."""
        try:
            validated_args = self.args_model.model_validate_json(json_string)
            return isinstance(validated_args, self.args_model)
        except ValidationError:
            return False

    def run(self, *args, **kwargs) -> Any:
        """Execute the function with validated arguments."""
        try:
            # Handle positional arguments by converting them to keyword arguments
            if args:
                sig = signature(self.func)
                arg_names = list(sig.parameters.keys())
                kwargs.update(dict(zip(arg_names, args)))
            # Validate arguments with the provided Pydantic schema
            validated_args = self.args_model(**kwargs)
            return self.func(**validated_args.model_dump())
        except ValidationError as e:
            raise ValueError(f"Argument validation failed for tool '{self.name}': {str(e)}")
        except Exception as e:
            raise ValueError(f"An error occurred during the execution of tool '{self.name}': {str(e)}")

    def __call__(self, *args, **kwargs) -> Any:
        """Allow the AgentTool instance to be called like a regular function."""
        return self.run(*args, **kwargs)
```

With the new methods in the AgentTool class, you can now validate tool arguments and execute the function with validated parameters. As in the previous example, initialize an AgentTool with the Tool decorator. Then, validate a dummy tool argument JSON string and run the tool:

```python
import json

tool_json_arguments = '{\n "location": "Virginia"\n}'

# Validate the JSON string arguments
get_weather.validate_json_args(tool_json_arguments)  # True

# Convert JSON string to dictionary and run the tool
tool_arguments_dict = json.loads(tool_json_arguments)
get_weather.run(**tool_arguments_dict)
```

```
'Virginia: 80F.'
```

One Final Recap! 😅 Just to Clarify! 📝

With these updates, I defined a Pydantic model for function arguments, used the Tool decorator to create an AgentTool, converted it to OpenAI's Function Calling format, and sent it along with a user message to the LLM.

When the LLM suggests tool calls, we validate the arguments with AgentTool's validate_json_args method. Once validated, we run the tool.
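One design choice worth considering is what happens when validation fails. `AgentTool.run` raises `ValueError`, which stops the agent. An alternative, sketched below as an assumption rather than the approach used in this post, is to turn the validation error into the tool message content so the LLM can see what went wrong and retry with corrected arguments. The helper name `run_tool_call` is hypothetical, and Pydantic v2 is assumed:

```python
from typing import Any, Callable, Type

from pydantic import BaseModel, Field, ValidationError


class GetWeatherSchema(BaseModel):
    """Get weather information based on location."""
    location: str = Field(description="Location to get weather for")


def get_weather(location: str) -> str:
    """Get weather information based on location."""
    return f"{location}: 80F."


def run_tool_call(func: Callable[..., Any], args_model: Type[BaseModel], json_args: str) -> str:
    """Validate JSON arguments, then run the tool; report validation errors as text."""
    try:
        validated = args_model.model_validate_json(json_args)
    except ValidationError as exc:
        # Returned as the tool message content so the model can self-correct
        return f"Invalid arguments for '{func.__name__}': {exc}"
    return str(func(**validated.model_dump()))


print(run_tool_call(get_weather, GetWeatherSchema, '{"location": "Virginia"}'))  # Virginia: 80F.
print(run_tool_call(get_weather, GetWeatherSchema, '{"city": "Virginia"}'))
```

Returning errors as text keeps the loop alive, but it also means a persistently confused model can burn iterations, so a cap such as `max_iterations` remains important.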

Operationalizing Tool Execution ⚒️ -> ⚡️

After setting up our agent to select and prepare tools with the help of LLMs, the next step was to manage these tools effectively. It was essential to have a system that could handle tool availability and execution seamlessly.

The Agent Tool Executor Class

I created an AgentToolExecutor class to manage the agent's interaction with tools. This class ensures tools are registered, managed, and executed properly, bridging the gap between choosing the right tool and executing it effectively.

```python
from typing import Any, Dict, List, Optional


class AgentToolExecutor:
    """Manages tool registration and execution."""

    def __init__(self, tools: Optional[List[AgentTool]] = None):
        self.tools: Dict[str, AgentTool] = {}
        if tools:
            for tool in tools:
                self.register_tool(tool)

    def register_tool(self, tool: AgentTool):
        """Registers a tool."""
        if tool.name in self.tools:
            raise ValueError(f"Tool '{tool.name}' is already registered.")
        self.tools[tool.name] = tool

    def execute(self, tool_name: str, *args, **kwargs) -> Any:
        """Executes a tool by name with given arguments."""
        tool = self.tools.get(tool_name)
        if not tool:
            raise ValueError(f"Tool '{tool_name}' not found.")
        try:
            return tool(*args, **kwargs)
        except Exception as e:
            raise ValueError(f"Error executing tool '{tool_name}': {e}") from e

    def get_tool_names(self) -> List[str]:
        """Returns a list of all registered tool names."""
        return list(self.tools.keys())

    def get_tool_details(self) -> str:
        """Returns details of all registered tools."""
        tools_info = [f"{tool.name}: {tool.description} Args schema: {tool.args_schema['properties']}" for tool in self.tools.values()]
        return '\n'.join(tools_info)
```

🚦Assembly Line: Integrating Tool Executor with AI Agent 🤖

With the main components nearly complete, I refined the Agent base class by registering tools as AgentTool objects, converting them to OpenAI's Function Calling format, and managing tool calls using the AgentToolExecutor. Each tool execution was recorded in a tool_history array and relayed back to the LLM, ensuring a seamless flow of interaction.

I introduced a parse_response method to handle LLM responses suggesting tool executions. This method checks for tools, executes them, and updates the tool history for accurate context. Here's how I implemented these capabilities:

```python
import json
import logging
from typing import Dict, List, Optional

logger = logging.getLogger(__name__)


class Agent:
    """Integrates LLM client, tools, memory, and manages tool executions."""

    def __init__(self, llm_client, system_message: Dict[str, str], max_iterations: int = 10, tools: Optional[List[AgentTool]] = None):
        self.llm_client = llm_client
        self.executor = AgentToolExecutor()
        self.memory = ChatMessageMemory()
        self.system_message = system_message
        self.max_iterations = max_iterations
        self.tool_history = []
        self.function_calls = None

        # Register and convert tools
        if tools:
            for tool in tools:
                self.executor.register_tool(tool)
            self.function_calls = [tool.to_openai_function_call_definition() for tool in tools]

    def run(self, user_message: Dict[str, str]):
        """Generates responses, manages tool calls, and updates memory."""
        self.memory.add_message(user_message)
        for _ in range(self.max_iterations):
            chat_history = [self.system_message] + self.memory.get_messages() + self.tool_history
            response = self.llm_client.generate(chat_history, tools=self.function_calls)
            if self.parse_response(response):
                # Tools ran; loop again so the LLM can see their results
                continue
            else:
                self.memory.add_message(response)
                self.tool_history = []
                return response
        # Without this, run() would silently return None when the limit is hit
        self.tool_history = []
        raise RuntimeError(f"No final response after {self.max_iterations} iterations.")

    def parse_response(self, response) -> bool:
        """Executes tool calls suggested by the LLM and updates tool history."""
        if response.tool_calls:
            # Keep the assistant message that requested the tools
            self.tool_history.append(response)
            for tool in response.tool_calls:
                tool_name = tool.function.name
                tool_args = tool.function.arguments
                tool_args_dict = json.loads(tool_args)
                try:
                    logger.info(f"Executing {tool_name} with args: {tool_args}")
                    execution_results = self.executor.execute(tool_name, **tool_args_dict)
                    self.tool_history.append({
                        "role": "tool",
                        "tool_call_id": tool.id,
                        "name": tool_name,
                        "content": str(execution_results)
                    })
                except Exception as e:
                    raise ValueError(f"Execution error in tool '{tool_name}': {e}") from e
            return True
        return False
```

To test the new Agent class, follow the steps in previous examples. Initialize the client, define tools with the Tool decorator, initialize the agent with those tools and the LLM client, and finally, run the agent with your query.

```python
# Set Environment Variables
from dotenv import load_dotenv

load_dotenv()  # take environment variables from .env.

# Initialize LLM Client
client = OpenAIChatCompletion(base_url='https://api.openai.com/v1', model='gpt-4')

# Initialize Agent with tools and LLM client
# Define Agent Tools (jump and get_weather) with their respective Pydantic models describing their argument schema.
# ...
# .....

# Define the system message
system_message = {"role": "system", "content": "You are a helpful assistant."}

# Initialize the Agent with the LLM client, system message, and tools
agent = Agent(llm_client=client, system_message=system_message, tools=tools)

# Run Task
# Define a user message
user_message = {"role": "user", "content": "What is the weather in Virginia?"}

# Generate a response using the agent
response = agent.run(user_message)
response
```

```
ChatCompletionMessage(content='The weather in Virginia is currently 80°F.', role='assistant', function_call=None, tool_calls=None)
```

Would it Handle Multiple Tool Executions? 😎

You can adjust the user message to ask a question that will require a tool to run multiple times. You can enable logging to see tools being executed.

# Enable Logging
import logging
logging.basicConfig(level=logging.INFO)

# Reset previous interactions
agent.memory.reset_memory()

# Define a new task
user_message = {"role": "user", "content": "What is the weather in Virginia, Washington and New York?"}

# Generate a response using the agent
response = agent.run(user_message)
response

INFO:__main__:Executing get_weather with args: {"location": "Virginia"}
INFO:__main__:Executing get_weather with args: {"location": "Washington"}
INFO:__main__:Executing get_weather with args: {"location": "New York"}
ChatCompletionMessage(content='The current weather is:\n\n- Virginia: 80°F\n- Washington: 80°F\n- New York: 80°F', role='assistant', function_call=None, tool_calls=None)

Even though the dummy function always returns the same result, the get_weather tool was executed 3 times. Up to this point, I had a basic functional LLM-based AI agent using Function Calling capabilities.
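With Function Calling, a single assistant turn can request several tool calls at once, and the API expects every request to be answered by a `tool` message whose `tool_call_id` matches it; that is the bookkeeping `parse_response` does with `tool_history`. The sketch below reproduces that message sequence with plain dictionaries and no API call. The call IDs, the `TOOLS` registry, and `fake_get_weather` are hypothetical stand-ins, not part of the agent above.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical stand-in for the dummy get_weather tool
def fake_get_weather(location: str) -> str:
    return f"{location}: 80F."

TOOLS: Dict[str, Callable[..., str]] = {"get_weather": fake_get_weather}

# An assistant turn requesting three tool calls at once, shaped like the
# Chat Completions "tool_calls" field (the ids are made up for this sketch)
assistant_turn: Dict[str, Any] = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": f"call_{i}",
            "type": "function",
            "function": {"name": "get_weather", "arguments": json.dumps({"location": loc})},
        }
        for i, loc in enumerate(["Virginia", "Washington", "New York"])
    ],
}

def answer_tool_calls(turn: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the assistant turn followed by one tool message per requested call."""
    history = [turn]
    for call in turn["tool_calls"]:
        fn = TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        history.append({
            "role": "tool",
            "tool_call_id": call["id"],  # must match the request it answers
            "content": fn(**args),
        })
    return history

tool_history = answer_tool_calls(assistant_turn)
for message in tool_history[1:]:
    print(message["tool_call_id"], message["content"])
```

Once these four messages are appended to the chat history, the next `generate` call lets the model read all three observations together and write one combined answer.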

The following notebook contains all the code to build this agent, which I will modify in the next sections:

What Models Support Function Calling? 🧠

What About Models That Do Not Support Function Calling? 🗣️ 🧠

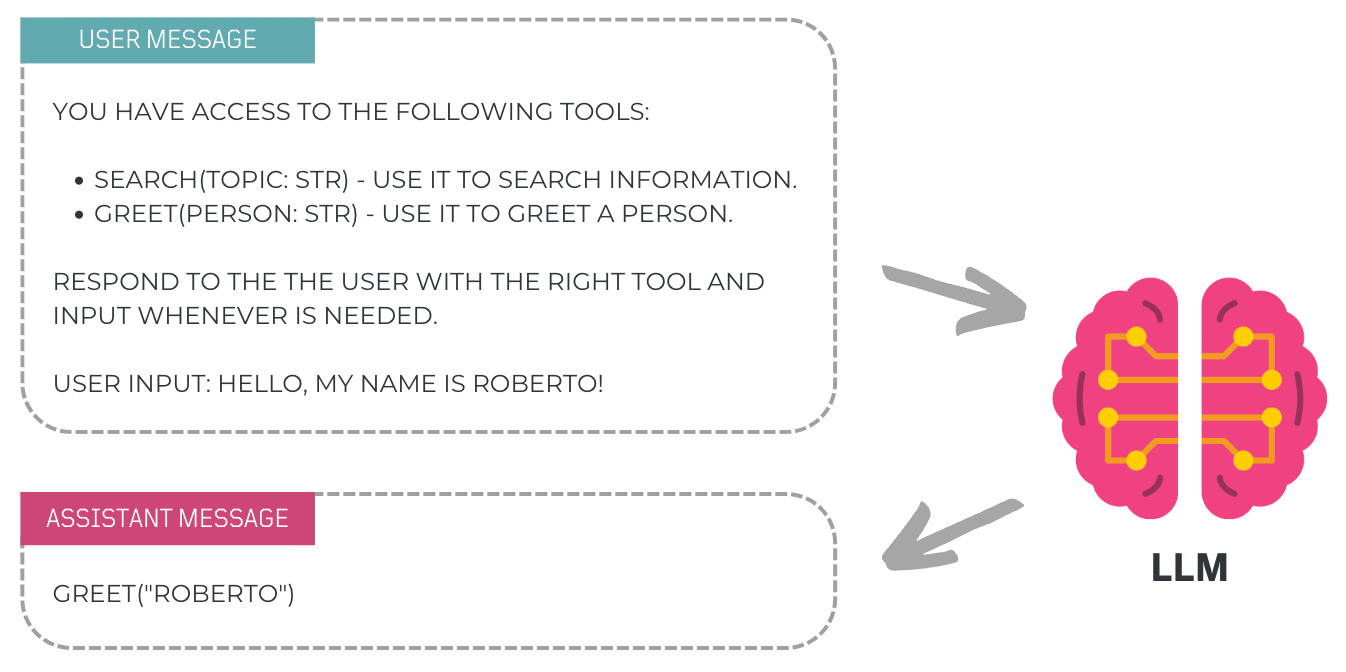

For models without Function Calling, we use prompt engineering to add decision-making logic directly into the prompt. This guides the LLM to choose the right tool based on the prompt and the user's intent.

Selecting the Right Tool via Prompting 🗣️

To make the LLM select the right tool, include context about tools and their argument schemas in the prompt. Then, instruct the LLM to return the name of the chosen tool along with the suggested tool arguments.
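One way to produce that tool context without writing it by hand is to render it from the Python functions themselves. The sketch below uses the standard library's `inspect.signature` and docstrings to emit lines in the same `name(args) - description` style as the prompt that follows; `search`, `greet`, and `describe_tools` are hypothetical examples, not part of the agent.

```python
import inspect
from typing import Callable, List

# Hypothetical example tools mirroring the prompt below
def search(topic: str) -> str:
    """Use it to search information."""
    return f"Results for {topic}"

def greet(person: str) -> str:
    """Use it to greet a person."""
    return f"Hello, {person}!"

def describe_tools(tools: List[Callable]) -> str:
    """Render one 'name(signature) - docstring' line per tool."""
    lines = []
    for fn in tools:
        # Drop the return annotation so only the argument schema is shown
        sig = inspect.signature(fn).replace(return_annotation=inspect.Signature.empty)
        doc = inspect.getdoc(fn) or ""
        lines.append(f"{fn.__name__}{sig} - {doc}")
    return "\n".join(lines)

print("You have access to the following tools:")
print(describe_tools([search, greet]))
```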

🗣️ "You have access to the following tools" -> 🧠

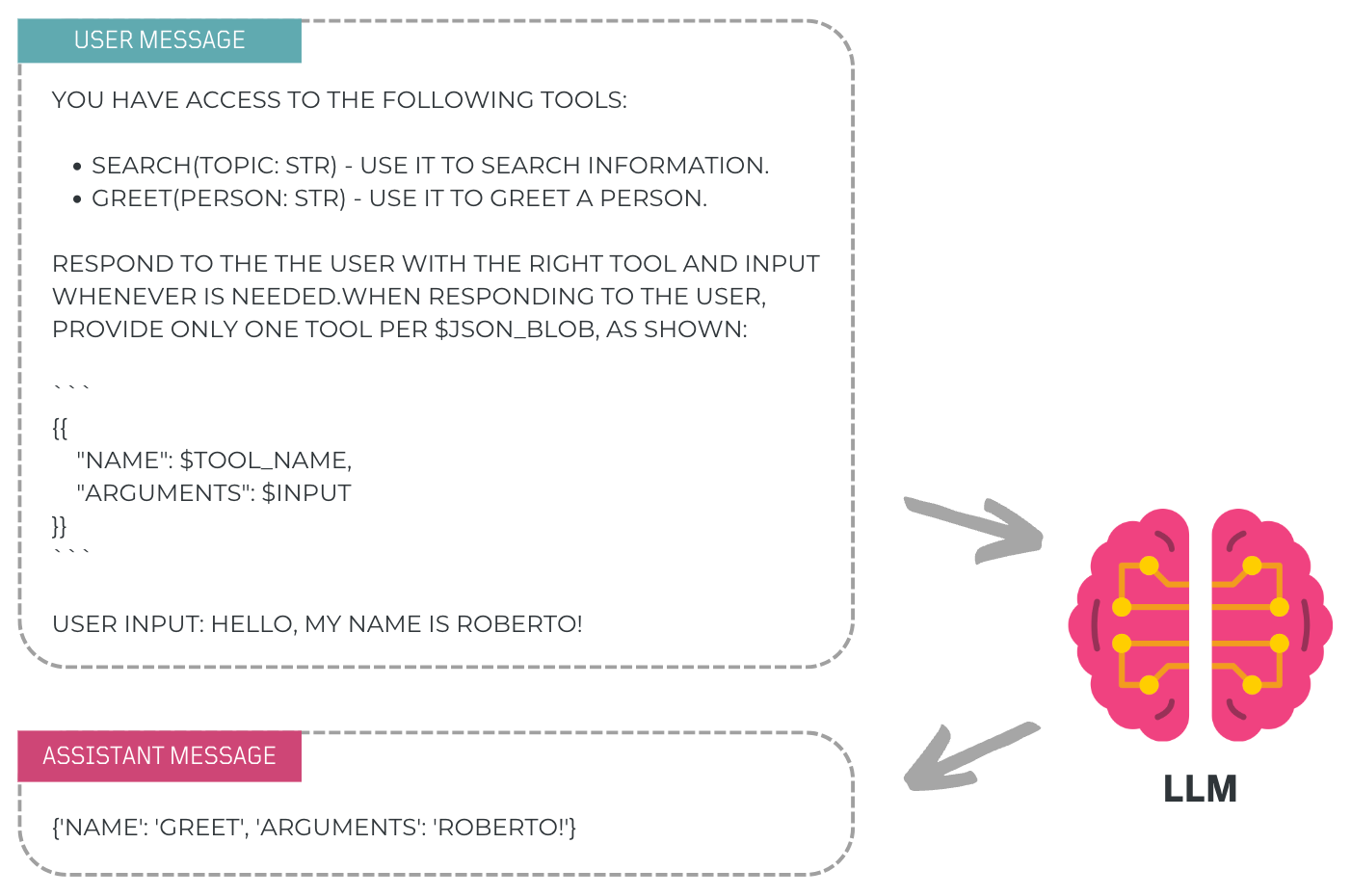

🗣️ "Use a JSON Blob to Specify a Tool" -> 🧠

Instruct the LLM to return tool details in formats like JSON, XML, or YAML. For example, requesting a JSON blob helps the LLM structure its response as a JSON object, which the agent can then parse and validate against a predefined schema.
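Validating against a predefined schema can be sketched with Pydantic (v2 API, matching the `model_dump` call used later in this post). The `ToolCall` model, the `ALLOWED_TOOLS` set, and `parse_tool_call` are hypothetical; the point is that a reply must be valid JSON, have the right shape (for example, `arguments` as a dictionary rather than a bare string), and name a tool that actually exists before the agent tries to run it.

```python
import json
from typing import Any, Dict, Optional

from pydantic import BaseModel, ValidationError

class ToolCall(BaseModel):
    """Hypothetical schema for the JSON blob the LLM is asked to return."""
    name: str
    arguments: Dict[str, Any]

ALLOWED_TOOLS = {"search", "greet"}  # hypothetical tool registry

def parse_tool_call(raw: str) -> Optional[ToolCall]:
    """Parse and validate an LLM reply; return None if it is not a usable tool call."""
    try:
        call = ToolCall.model_validate(json.loads(raw))
    except (json.JSONDecodeError, ValidationError):
        return None
    # Schema-valid JSON can still name a tool that does not exist
    return call if call.name in ALLOWED_TOOLS else None

good = parse_tool_call('{"name": "greet", "arguments": {"person": "Roberto"}}')
print(good)
# A bare string for "arguments" cannot be unpacked as keyword arguments, so it is rejected
print(parse_tool_call('{"name": "greet", "arguments": "Roberto"}'))
```

Returning `None` instead of raising keeps the caller free to decide whether to retry the LLM with an error hint or fall back to a direct answer.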

You can test this with our current agent by sending a prompt that includes tool details and an instruction to respond with a JSON object. No AgentTool objects are registered with the agent for this test.

# Setup client with environment variables
client = OpenAIChatCompletion(base_url='https://api.openai.com/v1', model='gpt-4')

# Define the system message
system_message = {"role": "system", "content": "You are a helpful assistant."}

# Initialize the Agent with the LLM client and system message
agent = Agent(llm_client=client, system_message=system_message)

USER_PROMPT = """
You have access to the following tools:
search(topic: str) - Use it to search information.
greet(person: str) - Use it to greet a person.
Respond to the user with the right tool and input whenever is needed.
When responding to the user, provide only ONE tool per $JSON_BLOB, as shown in the example below delimited by triple backticks:
```
{{
"name": $TOOL_NAME,
"arguments": $INPUT
}}
```
User input: Hello, this is roberto!
"""

# Define a user message
user_message = {"role": "user", "content": USER_PROMPT}

# Generate a response using the agent
response = agent.run(user_message)
response

ChatCompletionMessage(content='{\n "name": "greet",\n "arguments": "Roberto"\n}', role='assistant', function_call=None, tool_calls=None)

After receiving the response, pray 😅 and parse the message content:

import json
json.loads(response.content)

{'name': 'greet', 'arguments': 'roberto'}

What about JSON Within Text? 🔍

Instructing an LLM to output JSON can result in mixed explanatory text and structured responses. When this happens, we can use regex patterns to extract the JSON embedded within the text.

For nested JSON structures, Python's built-in re module isn't sufficient because it does not support recursive patterns. The third-party regex library does, so a pattern like r'\{(?:[^{}]|(?R))*\}' can match balanced, arbitrarily nested braces and pull out the whole JSON object, which json.loads then parses (https://stackoverflow.com/a/54235803).
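If you would rather avoid the extra dependency, the standard library offers a different technique: `json.JSONDecoder.raw_decode` parses one JSON value starting at a given index and reports where it ended, ignoring any trailing text. The sketch below (the helper name `extract_first_json_object` is hypothetical) tries each `{` in turn until one starts a valid object. It does not do the `{{`/`}}` and backslash clean-up shown next, so you would still apply that first.

```python
import json
from typing import Any, Optional

def extract_first_json_object(text: str) -> Optional[Any]:
    """Return the first JSON object embedded in text, or None if there is none."""
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            # raw_decode parses one value at `start` and ignores what follows it,
            # so nesting and braces inside string values are handled by the JSON parser
            obj, _end = decoder.raw_decode(text, start)
            return obj
        except json.JSONDecodeError:
            # Not valid JSON at this brace; try the next one
            start = text.find("{", start + 1)
    return None

reply = 'Sure! Here is the tool call: {"name": "search", "arguments": {"topic": "ReAct {agents}"}} Hope that helps.'
print(extract_first_json_object(reply))
```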

import regex  # third-party package: pip install regex
import json

def parse_nested_json(text):
    # Unescape backslashes
    text = text.replace('\\\\n', '\\n').replace('\\n', '\n').replace('\\\'', '\'').replace('\\\\', '\\')
    # Replace double curly braces with single curly braces
    text = text.replace('{{', '{').replace('}}', '}')
    pattern = regex.compile(r'\{(?:[^{}]|(?R))*\}')  # Supports nested structures
    match = pattern.search(text)
    if match:
        try:
            return json.loads(match.group())
        except json.JSONDecodeError:  # Balanced braces, but not valid JSON
            pass
    return None  # No valid JSON found or parsing error

Implementing a Prompt Formatter 🗣️

It became clear that I needed a way to dynamically craft prompt templates that could incorporate elements like tool details, user input, and even chat history. To achieve this, I created the StringPromptTemplate class.

The String Prompt Template Class

import re

class StringPromptTemplate:
    """Handles dynamic prompt formatting for an AI agent."""

    def __init__(self, template: str):
        """Initializes the prompt template and extracts variables."""
        self.template = template
        self.variables = {}
        self.required_variables = self.extract_variables()

    def extract_variables(self):
        """Extracts placeholders from the template."""
        return set(re.findall(r'\{(.*?)\}', self.template))

    def update_variables(self, **kwargs):
        """Updates template variables."""
        self.variables.update(kwargs)
        self.required_variables -= set(kwargs.keys())

    def format_prompt(self, **kwargs):
        """Generates a formatted prompt and tracks remaining variables."""
        combined_variables = {**self.variables, **kwargs}
        self.required_variables -= set(kwargs.keys())
        return self.template.format(**combined_variables)

Test the new class by updating the USER_PROMPT string: replace the hardcoded tools with a {tool_details} placeholder, then initialize an instance.

USER_PROMPT = """
You have access to the following tools:
{tool_details}
Respond to the user with the right tool and input whenever is needed.
When responding to the user, provide only ONE tool per $JSON_BLOB, as shown in the example below delimited by triple backticks:
```
{{
"name": $TOOL_NAME,
"arguments": $INPUT
}}
```
User input: What is the weather in Virginia?
"""

formatter = StringPromptTemplate(USER_PROMPT)

Then, define the previous tools with their respective Pydantic models.

# Python function arguments schema
from pydantic import BaseModel, Field

class GetWeatherSchema(BaseModel):
    location: str = Field(description="location to get weather for")

@Tool(args_model=GetWeatherSchema)
def get_weather(location: str) -> str:
    """Get weather information based on location."""
    return f"{location}: 80F."

class JumpSchema(BaseModel):
    distance: str = Field(description="Distance for agent to jump")

@Tool(args_model=JumpSchema)
def jump(distance: str) -> str:
    """Jump a specific distance."""
    return f"I jumped the following distance {distance}"

tools = [get_weather, jump]

Get the tool details and fill in the tool_details placeholder.

# Get tool details
tools_info = [f"{tool.name}: {tool.description} Args schema: {tool.args_schema['properties']}" for tool in tools]
tool_details = '\n'.join(tools_info)
user_prompt_formatted = formatter.format_prompt(tool_details=tool_details)
print(user_prompt_formatted)

You have access to the following tools:
get_weather: Get weather information based on location. Args schema: {'location': {'description': 'location to get weather for', 'title': 'Location', 'type': 'string'}}
jump: Jump a specific distance. Args schema: {'distance': {'description': 'Distance for agent to jump', 'title': 'Distance', 'type': 'string'}}
Respond to the user with the right tool and input whenever is needed...
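`StringPromptTemplate.extract_variables` finds placeholders with the regex `\{(.*?)\}`. That works for the templates in this post because each escaped `{{` sits on its own line, but if an escaped brace and its content share a line, the regex captures text such as `{"name": $TOOL_NAME` as a variable name. A sketch of a sturdier alternative uses the standard library's `string.Formatter().parse`, which follows the same rules as `str.format` (the helper name `template_fields` is hypothetical):

```python
import re
from string import Formatter
from typing import Set

def template_fields(template: str) -> Set[str]:
    """Return the placeholder names str.format would fill, ignoring {{ }} escapes."""
    return {
        field_name
        for _literal, field_name, _spec, _conversion in Formatter().parse(template)
        if field_name  # None for plain literal text, '' for a positional {}
    }

template = 'Tools:\n{tool_details}\nReply as:\n{{"name": $TOOL_NAME}}\nUser input: {user_input}'

# Compare against the regex approach used by extract_variables
naive = set(re.findall(r'\{(.*?)\}', template))
print(sorted(template_fields(template)))
print(sorted(naive))
```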

🚦Assembly Line: Integrating Prompt Formatter with AI Agent

With control over the prompt, I needed to adapt the agent class to choose the right tools based on the prompt rather than OpenAI's Function Calling feature. The new class integrates the prompt formatter and re-formats the prompt template every time the agent runs.

import logging
from typing import Dict, List, Optional

from pydantic import BaseModel

logger = logging.getLogger(__name__)

class Agent:
    """Integrates key components and manages tool executions."""

    def __init__(self, llm_client, system_message: Dict[str, str], max_iterations: int = 10, tools: Optional[List[AgentTool]] = None, prompt_template: Optional[StringPromptTemplate] = None):
        self.llm_client = llm_client
        self.executor = AgentToolExecutor()
        self.memory = ChatMessageMemory()
        self.system_message = system_message
        self.max_iterations = max_iterations
        self.tool_history = []
        self.function_calls = None
        self.prompt_template = prompt_template

        if tools:
            for tool in tools:
                self.executor.register_tool(tool)
            self.function_calls = [tool.to_openai_function_call_definition() for tool in tools]
            tool_details = self.executor.get_tool_details()
            tool_names = ' or '.join(self.executor.get_tool_names())
            # The template expects text, so pass the system message content, not the whole dict
            self.prompt_template.update_variables(
                system_message=self.system_message["content"],
                tool_details=tool_details,
                tool_names=tool_names
            )

    def run(self, task: str):
        """Generates responses, manages tool calls, and updates memory."""
        for _ in range(self.max_iterations):
            # Previous turns only; the current task is passed separately as user_input
            chat_history = self.messages_to_string()
            formatted_message = self.prompt_template.format_prompt(chat_history=chat_history, user_input=task)
            messages = [{"role": "user", "content": formatted_message}]
            response = self.llm_client.generate(messages=messages)
            # Store the user/assistant pair once, after the turn completes
            self.memory.add_conversation(user_message={"role": "user", "content": task}, assistant_message=response)
            return response

    def parse_response(self):
        """Parses LLM response."""
        pass

    def messages_to_string(self) -> str:
        """Converts messages to a string."""
        formatted_messages = []
        for message in self.memory.get_messages():
            # Assistant replies may be stored as ChatCompletionMessage (Pydantic) objects
            if isinstance(message, BaseModel):
                message = message.model_dump()
            formatted_messages.append(f"{message['role'].capitalize()}: {message['content']}")
        return "\n".join(formatted_messages)

Enable Tool Execution v2 ⚒️

To handle tool execution, update the run method to parse the LLM response and execute suggested tools. We are not enabling any execution loops yet.

    def run(self, task: str):
        """Generates responses, manages tool calls, and updates memory."""
        for _ in range(self.max_iterations):
            chat_history = self.messages_to_string()
            formatted_message = self.prompt_template.format_prompt(chat_history=chat_history, user_input=task)
            messages = [{"role": "user", "content": formatted_message}]
            response = self.llm_client.generate(messages=messages)
            action_dict = self.parse_response(response)
            if action_dict:
                action_name = action_dict["name"]
                action_arguments = action_dict["arguments"]
                logger.info(f"Executing {action_name} with arguments {action_arguments}")
                execution_results = self.executor.execute(action_name, **action_arguments)
                return execution_results
            else:
                logger.info("Agent is responding directly.")
                self.memory.add_conversation(user_message={"role": "user", "content": task}, assistant_message=response)
                return response

    def parse_response(self, response: Dict):
        """Extracts tools or continues the conversation."""
        import regex, json
        pattern = regex.compile(r'\{(?:[^{}]|(?R))*\}')  # Supports nested structures
        message_content = response.content
        # Unescape backslashes
        message_content = message_content.replace('\\\\n', '\\n').replace('\\n', '\n').replace('\\\'', '\'').replace('\\\\', '\\')
        # Replace double curly braces with single curly braces
        message_content = message_content.replace('{{', '{').replace('}}', '}')
        match = pattern.search(message_content)
        if match:
            action_content = match.group()
            try:
                action_dict = json.loads(action_content.strip())
                return action_dict
            except json.JSONDecodeError:
                raise ValueError("Invalid JSON in action content")
        return None

You can test this new agent mode with the following prompt template:

STRING_PROMPT_TEMPLATE = """
{system_message}. You have access to the following tools:
{tool_details}
Respond to the user with the right tool and input whenever is needed.
Valid tool name values: {tool_names}.
When responding to the user, provide only ONE tool per $JSON_BLOB, as shown in the example below delimited by triple backticks:
```
{{
"name": $TOOL_NAME,
"arguments": $INPUT
}}
```
Previous conversation history:
{chat_history}
New conversation:
{user_input}
"""

Initialize the prompt template as a StringPromptTemplate:

prompt_template = StringPromptTemplate(STRING_PROMPT_TEMPLATE)

Initialize the agent with an LLM client, system message, tools, and prompt template.

# Initialize LLM client
client = OpenAIChatCompletion(base_url='https://api.openai.com/v1', model='gpt-4')

# Define the system message
system_message = {"role": "system", "content": "You are a helpful assistant."}

# Initialize the Agent with the LLM client, system message, tools, and prompt template
agent = Agent(llm_client=client, system_message=system_message, tools=tools, prompt_template=prompt_template)

Finally, run the Agent.

agent.run("What is the weather in New York?")

'New York: 80F.'

While I could now drive reasoning via the prompt and execute the suggested tools, I still needed to manage the entire loop of reasoning, tool selection, action, and repetition. With OpenAI's Function Calling, that loop was carried by structured tool_calls and Tool messages; here I had to implement it through prompting techniques.

My next step was to find a technique that made the LLM reason over tool results and suggest the next steps, keeping the interaction flowing.

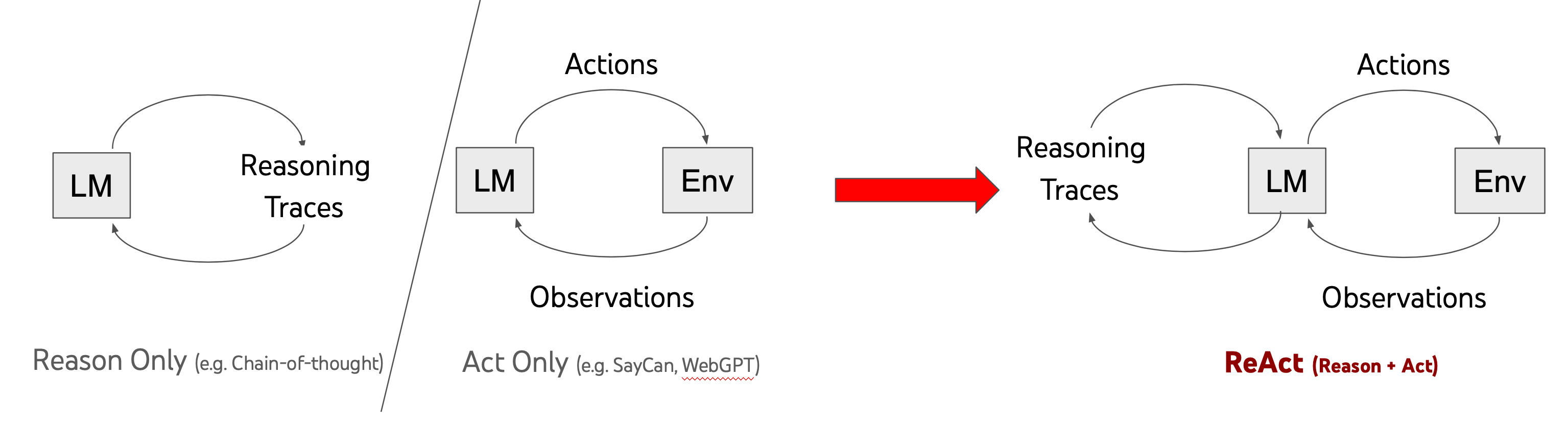

Enter ReAct (Reason 🧠+ Act ⚡️)!

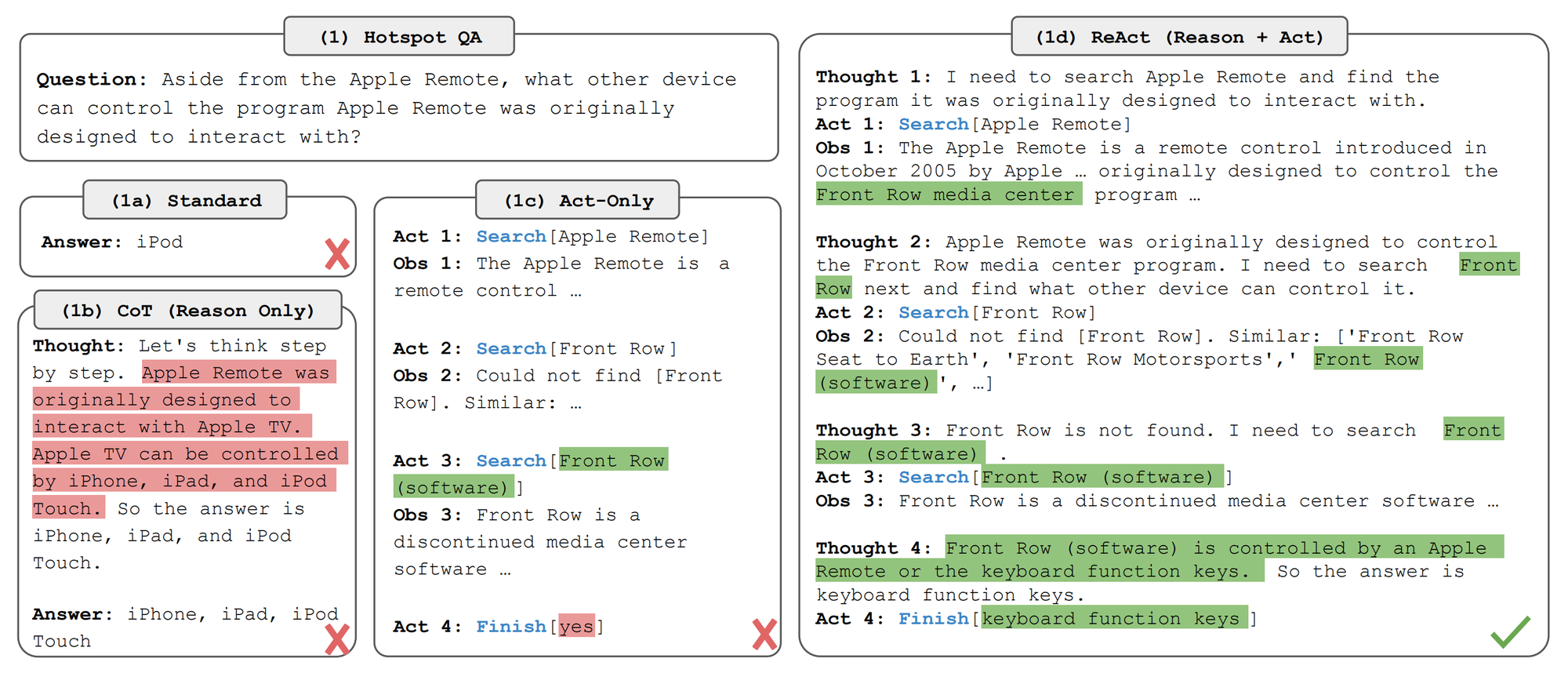

In 2022, ReAct was introduced to combine reasoning and acting, enabling LLM-based AI agents not only to figure things out but also to interact with the world around them, making decisions and taking steps based on their reasoning.

ReAct involves AI agents thinking, acting, and observing:

- Thought: Agent analyzes the situation and generates a thought.

- Action: Based on the thought, the agent takes an action.

- Observation: After acting, the agent observes the outcome to assess the impact and effectiveness.

Agents adapt these steps based on the task. For deep thinking tasks, the agent cycles through thinking, acting, and observing repeatedly. In action-heavy tasks, the agent thinks at key moments, deciding when to act. This flexibility helps the agent handle various scenarios efficiently.
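The Thought, Action, Observation cycle can be sketched in a few lines before wiring it to a real model. In this minimal sketch, `scripted_llm` replays two fixed replies instead of calling an API, and `get_weather`, `SCRIPTED_REPLIES`, and `react` are hypothetical; a real model would read the growing scratchpad (the question plus every previous Thought, Action, and Observation) to decide its next step.

```python
import json
import re
from typing import Callable, Dict, List

# Hypothetical tool and a scripted "LLM" so the loop runs without an API key
def get_weather(location: str) -> str:
    return f"{location}: 80F."

TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}

SCRIPTED_REPLIES: List[str] = [
    'Thought: I need the weather.\nAction: {"name": "get_weather", "arguments": {"location": "New York"}}',
    "Thought: I have what I need.\nFinal Answer: It is 80F in New York.",
]

def scripted_llm(prompt: str, step: int) -> str:
    """Stand-in for a model call; ignores the prompt and replays fixed replies."""
    return SCRIPTED_REPLIES[step]

def react(question: str, max_steps: int = 5) -> str:
    scratchpad = f"Question: {question}"
    for step in range(max_steps):
        reply = scripted_llm(scratchpad, step)  # Thought, then an Action or a Final Answer
        scratchpad += "\n" + reply
        final = re.search(r"Final Answer:\s*(.*)", reply, re.DOTALL)
        if final:
            return final.group(1).strip()
        if "Action:" not in reply:
            raise ValueError("Reply has neither an Action nor a Final Answer")
        action = json.loads(reply.split("Action:", 1)[1])       # Act
        observation = TOOLS[action["name"]](**action["arguments"])
        scratchpad += f"\nObservation: {observation}"           # Observe, fed back next step
    raise RuntimeError("No final answer within max_steps")

print(react("What is the weather in New York?"))
```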

ReAct Prompting 🗣️

Inspired by LangChain's prompt template, I created a prompt that structures the agent's reasoning, acting, and observing into a seamless loop.

# References:
# https://smith.langchain.com/hub/hwchase17/react-chat
# https://smith.langchain.com/hub/hwchase17/structured-chat-agent
STRING_PROMPT_TEMPLATE = """
{system_message}. You have access to the following tools:
{tool_details}
Respond to the user with the right tool and input whenever is needed.
Use a json blob to specify a tool by providing a name key (tool name) and an arguments key (tool input).
Valid "name" values: {tool_names}
Provide only ONE action per $JSON_BLOB, as shown:
```
{{
"name": $TOOL_NAME,
"arguments": $INPUT
}}
```
Follow this format:
Question: input question to answer
Thought: reasoning about previous and subsequent steps
Action:
```
$JSON_BLOB
```
Observation: action result
... (repeat Thought/Action/Observation N times)
Thought: I know what to respond as final answer. Using tool to provide final answer
Final Answer: Final response to human
Remember to ALWAYS respond with a valid json blob of a single action when a tool MUST be used.
Use tools if necessary. Respond directly if appropriate.
Format is Action:```$JSON_BLOB```then Observation
Previous conversation history:
{chat_history}
New conversation:
Question: {user_input}
{react_loop}
"""

🚦Assembly Line: Integrating ReAct Prompt with AI Agent 🤖

I had to update the run method a little bit to handle this new reasoning loop.

import logging
from typing import Dict, List, Optional

from pydantic import BaseModel

logger = logging.getLogger(__name__)

class Agent:
    """
    Basic Agent class responsible for integrating key components such as the LLM client, tools, memory, and managing tool executions.
    """

    def __init__(self, llm_client, system_message: Dict[str, str], max_iterations: int = 10, tools: Optional[List[AgentTool]] = None, prompt_template: Optional[StringPromptTemplate] = None):
        self.llm_client = llm_client
        self.executor = AgentToolExecutor()
        self.memory = ChatMessageMemory()
        self.system_message = system_message
        self.max_iterations = max_iterations
        self.tool_history = []
        self.function_calls = None
        self.prompt_template = prompt_template  # Instance of StringPromptTemplate or similar

        # Register each tool passed to the Agent using the executor
        if tools:
            for tool in tools:
                # Register Agent Tools
                self.executor.register_tool(tool)
            # Convert Agent Tools
            self.function_calls = [tool.to_openai_function_call_definition() for tool in tools]
            # Pre-fill the prompt template with initial variables
            # (no trailing comma: it would turn tool_details into a one-element tuple)
            tool_details = self.executor.get_tool_details()
            tool_names = ' or '.join(self.executor.get_tool_names())
            self.prompt_template.update_variables(
                system_message=self.system_message["content"],
                tool_details=tool_details,
                tool_names=tool_names
            )

    def run(self, task: str):
        # Get Chat History
        chat_history = self.messages_to_string()
        # Initialize ReAct Loop
        react_loop = ""
        # Showing Initial Task
        print(f"Question: {task}")
        for _ in range(self.max_iterations):
            # Generate a dynamic prompt using variables
            formatted_message = self.prompt_template.format_prompt(chat_history=chat_history, user_input=task, react_loop=react_loop)
            # Define everything as a user message
            messages = [{"role": "user", "content": formatted_message}]
            # Instruct LLM to choose the right tool and respond with a structured output;
            # generation stops before the model can write its own Observation
            response = self.llm_client.generate(messages=messages, stop=["\nObservation:"])
            # Parse response and extract tools if any
            action_dict = self.parse_response(response)
            if action_dict:
                action_name = action_dict["name"]
                action_arguments = action_dict["arguments"]
                logger.info(f"Executing {action_name} with arguments {action_arguments}")
                execution_results = self.executor.execute(action_name, **action_arguments)
                # Append the model's step and the real tool result to the ReAct loop
                current_iteration = f"\nThought: {response.content}\nObservation: {execution_results}"
                react_loop += current_iteration
                print(current_iteration)
            else:
                message_content = response.content
                print(message_content)
                if 'final answer' in str(message_content).lower():
                    final_message = str(message_content).lower().split("final answer:")[-1].strip()
                    response = {
                        "role": "assistant",
                        "content": final_message
                    }
                logger.info("Agent is responding directly.")
                self.memory.add_conversation(
                    user_message={"role": "user", "content": task},
                    assistant_message=response
                )
                return response

    def parse_response(self, response: Dict):
        """
        Extracts tools or continues the conversation.
        """
        import regex, json
        pattern = regex.compile(r'\{(?:[^{}]|(?R))*\}')  # Supports nested structures
        message_content = response.content
        # Unescape backslashes
        message_content = message_content.replace('\\\\n', '\\n').replace('\\n', '\n').replace('\\\'', '\'').replace('\\\\', '\\')
        # Replace double curly braces with single curly braces
        message_content = message_content.replace('{{', '{').replace('}}', '}')
        match = pattern.search(message_content)
        if match:
            action_content = match.group()
            try:
                action_dict = json.loads(action_content.strip())
                return action_dict
            except json.JSONDecodeError:
                raise ValueError("Invalid JSON in action content")
        return None  # No tool call in the response

    def messages_to_string(self) -> str:
        """
        Converts a list of message objects or dictionaries into a multi-line string representation.
        """
        formatted_messages = []
        for message in self.memory.get_messages():
            if isinstance(message, BaseModel):
                message = message.model_dump()
            # Single quotes inside the f-string keep this valid before Python 3.12
            formatted_messages.append(f"{message['role'].capitalize()}: {message['content']}")
        return "\n".join(formatted_messages)

I updated the LLM Client class to use the stop parameter of OpenAI's Chat Completions API, so token generation halts as soon as the model emits \nObservation:. This keeps the model from inventing its own tool results; the agent appends the real observation instead.
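To see what the stop sequence buys us, the sketch below simulates it locally on a string. The API applies `stop` during generation rather than afterwards, and the returned text does not include the stop sequence itself; `apply_stop` and the `raw` reply are hypothetical illustrations of that behavior, not an OpenAI function.

```python
from typing import List, Optional

def apply_stop(text: str, stop: Optional[List[str]]) -> str:
    """Simulate stop sequences: cut at the earliest match and drop the stop string itself."""
    if not stop:
        return text
    cut = len(text)
    for s in stop:
        idx = text.find(s)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]

# What a model might write if nothing stopped it: it invents its own observation
raw = (
    "Thought: I should check the weather.\n"
    'Action:\n```\n{"name": "get_weather", "arguments": {"location": "New York"}}\n```\n'
    "Observation: New York is sunny and 75F.\n"
    "Final Answer: It is 75F."
)
print(apply_stop(raw, ["\nObservation:"]))
```

Everything after the Action block, including the hallucinated "75F", is discarded, which is exactly the part the agent replaces with the real tool output.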

from typing import Any, Dict, List, Optional

import openai
from openai.types.chat import ChatCompletionMessage

class OpenAIChatCompletion:
    """Handles interaction with OpenAI's API for generating chat completions."""

    def __init__(self, model: str, api_key: Optional[str] = None, base_url: Optional[str] = None):
        self.client = openai.OpenAI(api_key=api_key, base_url=base_url)
        self.model = model

    def generate(self, messages: List[Dict[str, str]], tools: Optional[List[Dict[str, Any]]] = None, stop: Optional[List[str]] = None, **kwargs) -> ChatCompletionMessage:
        """Generate a response from OpenAI's API based on input messages."""
        params = {
            'messages': messages,
            'model': self.model,
            'tools': tools,
            'stop': stop,
            **kwargs
        }
        response = self.client.chat.completions.create(**params)
        return response.choices[0].message

You can test this new agent mode with the following prompt template:

prompt_template = StringPromptTemplate(STRING_PROMPT_TEMPLATE)

# Initialize LLM client
client = OpenAIChatCompletion(base_url='https://api.openai.com/v1', model='gpt-4')

# Define the system message
system_message = {"role": "system", "content": "You are a helpful assistant."}

# Initialize the Agent with the LLM client, system message, tools, and prompt template
agent = Agent(llm_client=client, system_message=system_message, tools=tools, prompt_template=prompt_template)

Run the agent with all those changes:

agent.run("What is the weather in New York?")

Take a look at the ReAct Loop:

Question: What is the weather in New York?
Thought: Thought: The user is asking for the weather information in New York. I will use the "get_weather" tool to provide this information.
Action:
```
{
"name": "get_weather",
"arguments": {"location": "New York"}
}
```
Observation: New York: 80F.
Thought: I observed that the tool successfully retrieved the current temperature in New York, to which is 80F. Now I can respond to the user's query.
Final Answer: The current weather in New York is 80F.

{'role': 'assistant', 'content': 'the current weather in new york is 80f.'}
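The returned content is all lowercase because `run()` lowercases the whole message before splitting on "final answer:". Matching the marker case-insensitively while slicing the original string keeps the model's casing. A minimal sketch with a hypothetical `extract_final_answer` helper, which, like the original split, keeps the text after the last marker:

```python
import re
from typing import Optional

def extract_final_answer(message: str) -> Optional[str]:
    """Return the text after the last 'Final Answer:' marker, casing preserved, or None."""
    parts = re.split(r"final answer:", message, flags=re.IGNORECASE)
    if len(parts) == 1:
        return None  # marker not present
    return parts[-1].strip()

reply = "Thought: I can answer now.\nFinal Answer: The current weather in New York is 80F."
print(extract_final_answer(reply))
```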

The following notebook contains all the code to build this ReAct agent:

Challenges with LLM-Based AI Agents:

- Ensuring agents don’t unnecessarily use tools by including instructions and creating a "Human input tool."

- Parsing LLM output for tool invocation and handling inconsistent JSON responses with an OutputParser.

- Enabling memory of previous steps using retrieval methods for context management.

- Managing long observations by storing outputs and using retrieval techniques.

- Keeping the agents focused by reiterating objectives and breaking tasks into sub-objectives.
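The "storing outputs" half of the long-observation challenge can be sketched as a small store that puts only a preview into the Observation line and keeps the full text aside. `ObservationStore` is hypothetical, and a plain key lookup stands in for the retrieval step; a fuller setup might index stored outputs in a vector store instead.

```python
from typing import Dict

class ObservationStore:
    """Hypothetical helper: keep full tool outputs aside, put a short preview in the prompt."""

    def __init__(self, max_chars: int = 200):
        self.max_chars = max_chars
        self._store: Dict[str, str] = {}

    def add(self, output: str) -> str:
        """Store long outputs and return the text to use as the Observation."""
        if len(output) <= self.max_chars:
            return output
        key = f"obs-{len(self._store) + 1}"
        self._store[key] = output
        return f"{output[: self.max_chars]}... [truncated; full output stored as {key}]"

    def get(self, key: str) -> str:
        """Retrieve a stored output, e.g. from a hypothetical 'read_observation' tool."""
        return self._store[key]

store = ObservationStore(max_chars=20)
print(store.add("short result"))
print(store.add("x" * 50))
print(len(store.get("obs-1")))
```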

If you made it this far, thank you for taking the time to read my blog post! I hope you found it insightful and helpful. Stay tuned for more updates!

Future Work

- Share a cybersecurity use-case with these capabilities (next blog post 😎)

- Explore other reasoning techniques besides ReAct.

- Dive into long-term memory with knowledge graphs and vector stores.

References

- A Survey on Large Language Model based Autonomous Agents. https://arxiv.org/abs/2308.11432

- LLM Powered Autonomous Agents. https://lilianweng.github.io/posts/2023-06-23-agent/

- A Complete Guide to LLMs-based Autonomous Agents (Part I). https://medium.com/the-modern-scientist/a-complete-guide-to-llms-based-autonomous-agents-part-i-69515c016792

- Functions, Tools and Agents with Langchain. https://learn.deeplearning.ai/functions-tools-agents-langchain

- Pydantic is all you need. https://www.youtube.com/watch?v=yj-wSRJwrrc&t=6s

- Anatomy of an AI Agent. https://www.youtube.com/watch?v=zdwgIe4zdsU

- ReAct: Synergizing Reasoning and Acting in Language Models. https://arxiv.org/abs/2210.03629

- ReAct: Synergizing Reasoning and Acting in Language Models (project page). https://react-lm.github.io/

- Understanding ReACT with LangChain. https://www.youtube.com/watch?v=Eug2clsLtFs

- Cooking with Semantic Kernel: Recipes for Building Chatbots, Agents, and more with LLMs (2023). https://www.youtube.com/watch?v=AX8xM9YnV3k

- How to build a tool-using agent with LangChain. https://cookbook.openai.com/examples/how_to_build_a_tool-using_agent_with_langchain

- The Rise and Potential of Large Language Model Based Agents: A Survey. https://arxiv.org/abs/2309.07864

- Emergent autonomous scientific research capabilities of large language models. https://arxiv.org/abs/2304.05332

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. https://arxiv.org/abs/2201.11903

- Igniting Language Intelligence: The Hitchhiker's Guide From Chain-of-Thought Reasoning to Language Agents. https://arxiv.org/abs/2311.11797

- Building Custom Tools and Agents with LangChain (gpt-3.5-turbo). https://www.youtube.com/watch?v=biS8G8x8DdA

- Harrison Chase - Agents Masterclass from LangChain Founder (LLM Bootcamp). https://www.youtube.com/watch?v=DWUdGhRrv2c

- Building Context-Aware Reasoning Applications with LangChain and LangSmith // Harrison Chase // LLM3. https://www.youtube.com/watch?v=6Ld2c34ph1I

- Building Context-Aware Reasoning Applications with LangChain and LangSmith. https://www.youtube.com/watch?v=Hy08dbsfJGg

- Beyond Chain-of-Thought, Effective Graph-of-Thought Reasoning in Large Language Models. https://arxiv.org/abs/2305.16582

- LangChain Tools. https://github.com/langchain-ai/langchain/blob/master/libs/core/langchain_core/tools.py

- LangChain Tool Calling. https://blog.langchain.dev/tool-calling-with-langchain/